Ref: Tomorrow starts here – Cisco (via algopop)

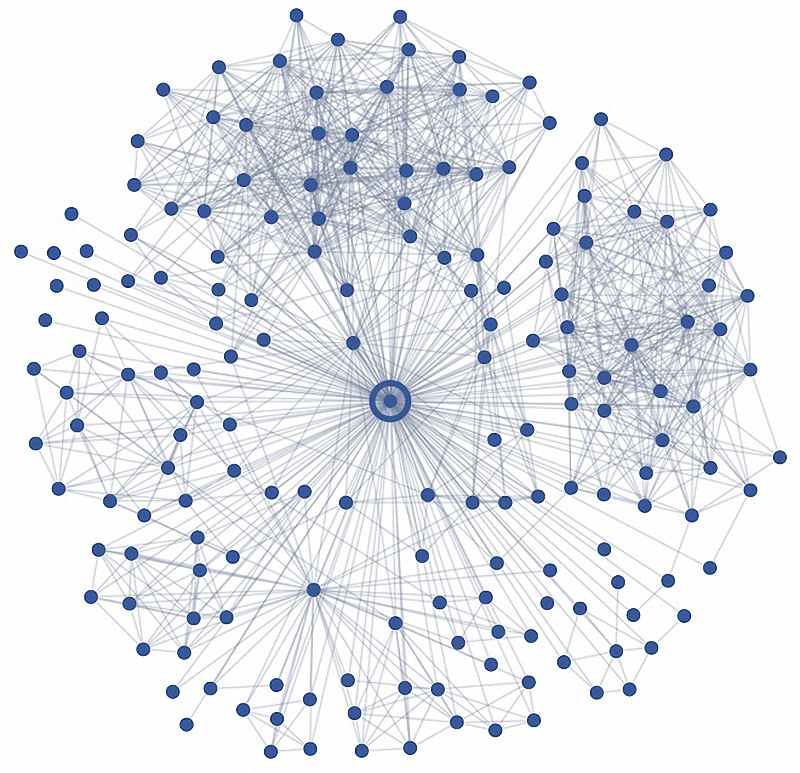

Today, Eduardo Graells-Garrido at the Universitat Pompeu Fabra in Barcelona as well as Mounia Lalmas and Daniel Quercia, both at Yahoo Labs, say they’ve hit on a way to burst the filter bubble. Their idea is that although people may have opposing views on sensitive topics, they may also share interests in other areas. And they’ve built a recommendation engine that points these kinds of people towards each other based on their own preferences.

The result is that individuals are exposed to a much wider range of opinions, ideas and people than they would otherwise experience. And because this is done using their own interests, they end up being equally satisfied with the results (although not without a period of acclimatisation). “We nudge users to read content from people who may have opposite views, or high view gaps, in those issues, while still being relevant according to their preferences,” say Graells-Garrido and co.
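To make the idea concrete, here is a minimal sketch of a recommender that favours people who share interests but disagree on a sensitive issue. It is not the researchers' method, which the excerpt does not describe. The `User` fields, the Jaccard similarity, the `view_gap` scaling and the multiplicative score are all assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class User:
    name: str
    interests: frozenset  # topics the user posts about (hypothetical data)
    stance: float         # position on one sensitive issue, from -1.0 to 1.0


def interest_similarity(a: User, b: User) -> float:
    """Jaccard overlap of the two users' interest sets."""
    union = a.interests | b.interests
    if not union:
        return 0.0
    return len(a.interests & b.interests) / len(union)


def view_gap(a: User, b: User) -> float:
    """Distance between the two stances, rescaled to the range 0..1."""
    return abs(a.stance - b.stance) / 2.0


def recommend(target: User, candidates: list, k: int = 3) -> list:
    """Rank candidates by shared interests weighted by view gap (assumed scoring)."""
    scored = [
        (interest_similarity(target, c) * view_gap(target, c), c.name)
        for c in candidates
        if c.name != target.name
    ]
    # Drop candidates with no overlap or no disagreement, then sort best-first.
    ranked = sorted((s for s in scored if s[0] > 0), reverse=True)
    return [name for _, name in ranked[:k]]


ana = User("ana", frozenset({"football", "cooking", "jazz"}), stance=-0.9)
ben = User("ben", frozenset({"football", "cooking", "hiking"}), stance=0.8)
cai = User("cai", frozenset({"football", "cooking", "jazz"}), stance=-0.8)
dee = User("dee", frozenset({"chess"}), stance=1.0)

print(recommend(ana, [ben, cai, dee]))
```

In this toy data, `ben` ranks above `cai`: he shares fewer interests with `ana` but sits on the other side of the issue, while `dee` is dropped because there is no common interest to build on.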

[…]

The results show that people can be more open than expected to ideas that oppose their own. It turns out that users who openly speak about sensitive issues are more open to receive recommendations authored by people with opposing views, say Graells-Garrido and co.

They also say that challenging people with new ideas makes them generally more receptive to change. That has important implications for social media sites. There is good evidence that users can sometimes become so resistant to change that any form of redesign dramatically reduces the popularity of the service. Giving them a greater range of content could change that.

Ref: How to Burst the “Filter Bubble” that Protects Us from Opposing Views – MIT Technology Review

At least, not yet. But you might think so if you’ve read the internet lately, which has been abuzz with a new study that shows how an algorithm can accurately guess a user’s romantic partner or foretell a potential breakup based on the structure of his or her social network. All this is true—sort of. (We’ll get to that in a bit.) More than anything, the algorithms and how they work demonstrate how Facebook is inching closer to producing predictive, even counterintuitive insights about our lives.

[…]

By analyzing social networks with the dispersion algorithm rather than embeddedness, Kleinberg and Backstrom were able to correctly guess a user’s spouse 60 percent of the time and a non-marital romantic partner nearly 50 percent of the time. Those are pretty impressive numbers, given that the data set comprised 1.3 million random Facebook users who were at least 20 years old, had between 50 and 2,000 friends and noted some form of relationship status on their profile. (The odds of randomly guessing a partner would thus range between 1-in-50 and 1-in-200—or between 30 and 120 times less than the results achieved by Kleinberg and Backstrom.)
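The "30 and 120 times" figure can be checked with a few lines of arithmetic. This sketch simply divides the quoted 60 percent spouse hit rate by the two random-guess baselines given in the excerpt (1-in-50 and 1-in-200). The function name is made up for illustration.

```python
def improvement_over_chance(accuracy: float, friend_count: int) -> float:
    """How many times better `accuracy` is than picking one friend at random."""
    random_chance = 1 / friend_count
    return accuracy / random_chance


spouse_accuracy = 0.60  # hit rate quoted in the excerpt

# Random-guess baselines as quoted in the excerpt.
for friend_count in (50, 200):
    factor = improvement_over_chance(spouse_accuracy, friend_count)
    print(f"{friend_count} friends: about {factor:.0f}x better than chance")
```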

[…]

But perhaps the most fascinating idea within the research came when you flip this formulation around. What happens to relationships when people’s social networks aren’t many-tentacled? Turns out, in cases where there was low dispersion (where the couple had a lot of mutual friends, but from distinct social circles), couples were 50 percent more likely to change their status to “single.” Put another way, having social networks that mirror each other too closely in one particular part of your life seems to result in more transitory romances. There’s a common sense way of reframing this idea: After all, how many people have you broken up with right around the time that it became clear that your friend circles weren’t gelling?

Ref: Facebook Inches Closer to Figuring Out the Formula for Love – Wired

A mild-mannered man says his life was completely ruined after Google’s autocomplete feature convinced the government he was building a bomb.

Though he intended to search the web for “How do I build a radio-controlled airplane,” Jeffrey Kantor, then a government contractor, says the search engine auto-completed his request, turning it into “How do I build a radio controlled bomb?”

Before he realized Google’s error, Kantor had already pressed enter, sparking a chain reaction he says resulted in months of harassment by government officials leading up to his eventual termination.

Ref: Man Says Google’s Autocomplete Feature Destroyed His Life – Gawker

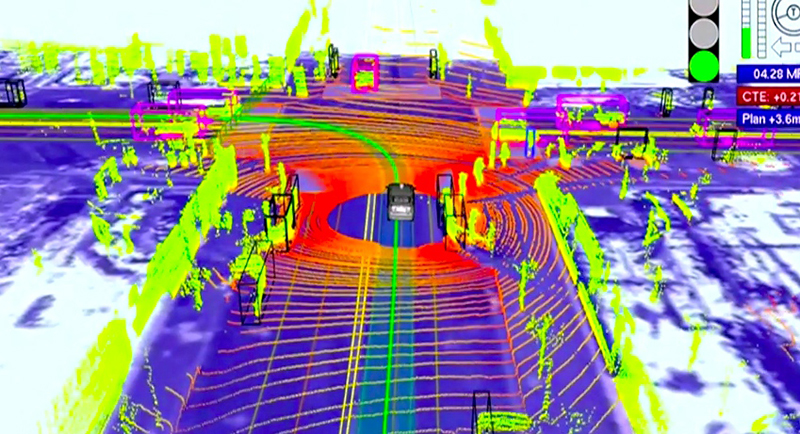

Impressive and touching as this demonstration is, it is also deceptive. Google’s cars follow a route that has already been driven at least once by a human, and a driver always sits behind the wheel, or in the passenger seat, in case of mishap. This isn’t purely to reassure pedestrians and other motorists. No system can yet match a human driver’s ability to respond to the unexpected, and sudden failure could be catastrophic at high speed.

But if autonomy requires constant supervision, it can also discourage it. Back in his office, Reimer showed me a chart that illustrates the relationship between a driver’s performance and the number of things he or she is doing. Unsurprisingly, at one end of the chart, performance drops dramatically as distraction increases. At the other end, however, where there is too little to keep the driver engaged, performance drops as well. Someone who is daydreaming while the car drives itself will be unprepared to take control when necessary.

Reimer also worries that relying too much on autonomy could cause drivers’ skills to atrophy. A parallel can be found in airplanes, where increasing reliance on autopilot technology over the past few decades has been blamed for reducing pilots’ manual flying abilities. A 2011 draft report commissioned by the Federal Aviation Administration suggested that overreliance on automation may have contributed to several recent crashes involving pilot error. Reimer thinks the same could happen to drivers. “Highly automated driving will reduce the actual physical miles driven, and a driver who loses half the miles driven is not going to be the same driver afterward,” he says. “By and large we’re forgetting about an important problem: how do you connect the human brain to this technology?”

Norman argues that autonomy also needs to be more attuned to how the driver is feeling. “As machines start to take over more and more, they need to be socialized; they need to improve the way they communicate and interact,” he writes. Reimer and colleagues at MIT have shown how this might be achieved, with a system that estimates a driver’s mental workload and attentiveness by using sensors on the dashboard to measure heart rate, skin conductance, and eye movement. This setup would inform a kind of adaptive automation: the car would make more or less use of its autonomous features depending on the driver’s level of distraction or engagement.
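As a rough illustration of adaptive automation, the sketch below turns three sensor signals into a single workload score and maps that score to a change in assistance. Everything numeric here is an assumption: the normalisation ranges, the equal weighting, the thresholds and the policy direction are placeholders, not values from Reimer's system.

```python
def normalise(value: float, low: float, high: float) -> float:
    """Clamp value into [low, high] and rescale it to 0..1."""
    clamped = max(low, min(high, value))
    return (clamped - low) / (high - low)


def estimate_workload(heart_rate_bpm: float, skin_conductance_us: float,
                      gaze_off_road_ratio: float) -> float:
    """Average three normalised signals into a 0..1 workload score.

    The ranges below are placeholders, not calibrated values.
    """
    signals = [
        normalise(heart_rate_bpm, 60.0, 120.0),
        normalise(skin_conductance_us, 2.0, 20.0),
        normalise(gaze_off_road_ratio, 0.0, 1.0),
    ]
    return sum(signals) / len(signals)


def choose_automation(workload: float) -> str:
    """Map workload to an automation change (hypothetical policy)."""
    if workload < 0.2:
        return "reduce assistance"    # under-stimulated: keep the driver involved
    if workload > 0.7:
        return "increase assistance"  # overloaded: let the car take on more
    return "hold current level"


print(choose_automation(estimate_workload(90.0, 11.0, 0.5)))
```

The two-sided policy mirrors the chart Reimer describes, where performance drops both when the driver is overloaded and when there is too little to do.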

Ref: Proceed with Caution toward the Self-Driving Car – MIT Technology Review

Several computers inside the car’s trunk perform split-second measurements and calculations, processing data pouring in from the sensors. Software assigns a value to each lane of the road based on the car’s speed and the behavior of nearby vehicles. Using a probabilistic technique that helps cancel out inaccuracies in sensor readings, this software decides whether to switch to another lane, to attempt to pass the car ahead, or to get out of the way of a vehicle approaching from behind. Commands are relayed to a separate computer that controls acceleration, braking, and steering. Yet another computer system monitors the behavior of everything involved with autonomous driving for signs of malfunction.
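The lane-scoring step might look something like the sketch below. The article does not say which probabilistic technique BMW uses, so a scalar Kalman measurement update stands in for it here. The scoring rule (the speed the car could hold in each lane), the `margin` and the sample readings are also assumptions.

```python
def kalman_update(estimate: float, variance: float,
                  measurement: float, sensor_variance: float):
    """One scalar Kalman measurement update: blend the estimate with a reading."""
    gain = variance / (variance + sensor_variance)
    new_estimate = estimate + gain * (measurement - estimate)
    new_variance = (1.0 - gain) * variance
    return new_estimate, new_variance


def smooth(readings, sensor_variance: float = 4.0) -> float:
    """Filter noisy readings of a quantity assumed constant over the window."""
    estimate, variance = readings[0], sensor_variance
    for z in readings[1:]:
        estimate, variance = kalman_update(estimate, variance, z, sensor_variance)
    return estimate


def lane_value(own_speed: float, lead_speed_readings) -> float:
    """Value of a lane: the speed we could hold there (hypothetical scoring)."""
    if not lead_speed_readings:  # nothing detected ahead in this lane
        return own_speed
    return min(own_speed, smooth(lead_speed_readings))


def pick_lane(own_speed: float, current_lane: str, lanes: dict,
              margin: float = 2.0) -> str:
    """Stay put unless another lane is better by more than `margin` m/s."""
    values = {name: lane_value(own_speed, r) for name, r in lanes.items()}
    best = max(values, key=values.get)
    if values[best] - values[current_lane] > margin:
        return best
    return current_lane


lanes = {
    "left": [],                    # empty lane
    "middle": [24.0, 26.5, 25.1],  # noisy speed readings of the car ahead, m/s
}
print(pick_lane(own_speed=33.0, current_lane="middle", lanes=lanes))
```

The margin keeps the car from switching lanes over small, noise-level differences. A production system would also have to weigh vehicles approaching from behind, which this sketch ignores.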

[…]

For one thing, many of the sensors and computers found in BMW’s car, and in other prototypes, are too expensive to be deployed widely. And achieving even more complete automation will probably mean using more advanced, more expensive sensors and computers. The spinning laser instrument, or LIDAR, seen on the roof of Google’s cars, for instance, provides the best 3-D image of the surrounding world, accurate down to two centimeters, but sells for around $80,000. Such instruments will also need to be miniaturized and redesigned, adding more cost, since few car designers would slap the existing ones on top of a sleek new model.

Cost will be just one factor, though. While several U.S. states have passed laws permitting autonomous cars to be tested on their roads, the National Highway Traffic Safety Administration has yet to devise regulations for testing and certifying the safety and reliability of autonomous features. Two major international treaties, the Vienna Convention on Road Traffic and the Geneva Convention on Road Traffic, may need to be changed for the cars to be used in Europe and the United States, as both documents state that a driver must be in full control of a vehicle at all times.

Most daunting, however, are the remaining computer science and artificial-intelligence challenges. Automated driving will at first be limited to relatively simple situations, mainly highway driving, because the technology still can’t respond to uncertainties posed by oncoming traffic, rotaries, and pedestrians. And drivers will also almost certainly be expected to assume some sort of supervisory role, requiring them to be ready to retake control as soon as the system gets outside its comfort zone.

[…]

The relationship between human and robot driver could be surprisingly fraught. The problem, as I discovered during my BMW test drive, is that it’s all too easy to lose focus, and difficult to get it back. The difficulty of reëngaging distracted drivers is an issue that Bryan Reimer, a research scientist in MIT’s Age Lab, has well documented (see “Proceed with Caution toward the Self-Driving Car,” May/June 2013). Perhaps the “most inhibiting factors” in the development of driverless cars, he suggests, “will be factors related to the human experience.”

In an effort to address this issue, carmakers are thinking about ways to prevent drivers from becoming too distracted, and ways to bring them back to the driving task as smoothly as possible. This may mean monitoring drivers’ attention and alerting them if they’re becoming too disengaged. “The first generations [of autonomous cars] are going to require a driver to intervene at certain points,” Clifford Nass, codirector of Stanford University’s Center for Automotive Research, told me. “It turns out that may be the most dangerous moment for autonomous vehicles. We may have this terrible irony that when the car is driving autonomously it is much safer, but because of the inability of humans to get back in the loop it may ultimately be less safe.”

An important challenge with a system that drives all by itself, but only some of the time, is that it must be able to predict when it may be about to fail, to give the driver enough time to take over. This ability is limited by the range of a car’s sensors and by the inherent difficulty of predicting the outcome of a complex situation. “Maybe the driver is completely distracted,” Werner Huber said. “He takes five, six, seven seconds to come back to the driving task—that means the car has to know [in advance] when its limitation is reached. The challenge is very big.”
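Huber's "five, six, seven seconds" translates into a surprising amount of road. The sketch below converts a takeover time into distance travelled. The 130 km/h highway speed is an assumption for illustration, not a figure from the article.

```python
def takeover_distance_m(speed_kmh: float, takeover_seconds: float) -> float:
    """Distance covered while the driver is still regaining control."""
    speed_ms = speed_kmh / 3.6  # convert km/h to m/s
    return speed_ms * takeover_seconds


for seconds in (5, 6, 7):
    distance = takeover_distance_m(130.0, seconds)
    print(f"{seconds} s at 130 km/h -> {distance:.0f} m")
```

At that speed the car covers roughly 180 to 250 metres before the driver is back in the loop, so it has to anticipate its own limits at least that far ahead.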

Before traveling to Germany, I visited John Leonard, an MIT professor who works on robot navigation, to find out more about the limits of vehicle automation. Leonard led one of the teams involved in the DARPA Urban Challenge, an event in 2007 that saw autonomous vehicles race across mocked-up city streets, complete with stop-sign intersections and moving traffic. The challenge inspired new research and new interest in autonomous driving, but Leonard is restrained in his enthusiasm for the commercial trajectory that autonomous driving has taken since then. “Some of these fundamental questions, about representing the world and being able to predict what might happen—we might still be decades behind humans with our machine technology,” he told me. “There are major, unsolved, difficult issues here. We have to be careful that we don’t overhype how well it works.”

Leonard suggested that much of the technology that has helped autonomous cars deal with complex urban environments in research projects—some of which is used in Google’s cars today—may never be cheap or compact enough to be employed in commercially available vehicles. This includes not just the LIDAR but also an inertial navigation system, which provides precise positioning information by monitoring the vehicle’s own movement and combining the resulting data with differential GPS and a highly accurate digital map. What’s more, poor weather can significantly degrade the reliability of sensors, Leonard said, and it may not always be feasible to rely heavily on a digital map, as so many prototype systems do. “If the system relies on a very accurate prior map, then it has to be robust to the situation of that map being wrong, and the work of keeping those maps up to date shouldn’t be underestimated,” he said.

Ref: Driverless Cars Are Further Away Than You Think – MIT Technology Review

But the game goes deeper. As personal digital assistant apps such as Google Now become widespread, so does the idea of algorithms that can not only meet but anticipate our needs. Extend the concept from the purely digital into the realm of retail, and you have what some industry prognosticators are calling “ambient commerce.” In a sensor-rich future where not just phones but all kinds of objects are internet-connected, same-day delivery becomes just one component of a bigger instant gratification engine.

On the same day Google announced that its Shopping Express was available to all Bay Area residents, eBay Enterprise Marketing Solutions head of strategy John Sheldon was telling a roomful of clients that there will soon come a time when customers won’t be ordering stuff from eBay anymore. Instead, they’ll let their phones do it.

Sheldon believes the “internet of things” is creating a data-saturated environment that will soon envelop commerce. In a chat with WIRED, he describes a few hypothetical examples that sound like they’re already within reach. Imagine setting up a rule in Nike+, he says, to have the app order you a new pair of shoes after you run 300 miles. Or picture a bicycle helmet with a sensor that “knows” when a crash has happened, which prompts an app to order a new one.
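A rule like Sheldon's shoe example is easy to picture in code. The sketch below is purely hypothetical: it does not use any real Nike+ or retailer API, and the class name, fields and the "order" (just an entry appended to a list) are invented for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class ShoeReorderRule:
    threshold_miles: float = 300.0   # the 300-mile trigger from the example
    miles_since_order: float = 0.0
    orders: list = field(default_factory=list)

    def record_run(self, miles: float) -> bool:
        """Log a run; place an order and reset the counter at the threshold."""
        self.miles_since_order += miles
        if self.miles_since_order >= self.threshold_miles:
            self.orders.append("running shoes")  # stand-in for a real purchase call
            self.miles_since_order -= self.threshold_miles
            return True
        return False


rule = ShoeReorderRule()
triggered = [rule.record_run(100.0) for _ in range(4)]
print(triggered, rule.orders)
```

The order fires on the third 100-mile entry, and the counter carries any surplus miles over into the next cycle.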

Now consider an even more advanced scenario. A shirt has a sensor that detects moisture. And you find yourself stuck out in the rain without an umbrella. Not too many minutes after the downpour starts, a car pulls up alongside you. A courier steps out and hands you an umbrella — or possibly a rain jacket, depending on what rules you set up ahead of time for such a situation, perhaps using IFTTT.

“Ambient commerce is about consumers turning over their trust to the machine,” Sheldon says.

Ref: One Day, Google Will Deliver the Stuff You Want Before You Ask – Wired

If a small tree branch pokes out onto a highway and there’s no oncoming traffic, we’d simply drift a little into the opposite lane and drive around it. But an automated car might come to a full stop, as it dutifully observes traffic laws that prohibit crossing a double-yellow line. This unexpected move would avoid bumping the object in front, but then cause a crash with the human drivers behind it.

Should we trust robotic cars to share our road, just because they are programmed to obey the law and avoid crashes?

[…]

Programmers still will need to instruct an automated car on how to act for the entire range of foreseeable scenarios, as well as lay down guiding principles for unforeseen scenarios. So programmers will need to confront this decision, even if we human drivers never have to in the real world. And it matters to the issue of responsibility and ethics whether an act was premeditated (as in the case of programming a robot car) or reflexively without any deliberation (as may be the case with human drivers in sudden crashes).

Anyway, there are many examples of car accidents every day that involve difficult choices, and robot cars will encounter at least those. For instance, if an animal darts in front of our moving car, we need to decide: whether it would be prudent to brake; if so, how hard to brake; whether to continue straight or swerve to the left or right; and so on. These decisions are influenced by environmental conditions (e.g., slippery road), obstacles on and off the road (e.g., other cars to the left and trees to the right), size of an obstacle (e.g., hitting a cow diminishes your survivability, compared to hitting a raccoon), second-order effects (e.g., crash with the car behind us, if we brake too hard), lives at risk in and outside the car (e.g., a baby passenger might mean the robot car should give greater weight to protecting its occupants), and so on.

[…]

In “robot ethics,” most of the attention so far has been focused on military drones. But cars are maybe the most iconic technology in America—forever changing cultural, economic, and political landscapes. They’ve made new forms of work possible and accelerated the pace of business, but they also waste our time in traffic. They rush countless patients to hospitals and deliver basic supplies to rural areas, but also continue to kill more than 30,000 people a year in the U.S. alone. They bring families closer together, but also farther away at the same time. They’re the reason we have suburbs, shopping malls, and fast-food restaurants, but also new environmental and social problems.

Ref: The Ethics of Autonomous Cars – TheAtlantic

[…]

That’s how this puzzle relates to the non-identity problem posed by Oxford philosopher Derek Parfit in 1984. Suppose we face a policy choice of either depleting some natural resource or conserving it. By depleting it, we might raise the quality of life for people who currently exist, but we would decrease the quality of life for future generations; they would no longer have access to the same resource.

Say that the best we could do is make robot cars reduce traffic fatalities by 1,000 lives. That’s still pretty good. But if they did so by saving all 32,000 would-be victims while causing 31,000 entirely new victims, we wouldn’t be so quick to accept this trade — even if there’s a net savings of lives.

[…]

With this new set of victims, however, are we violating their right not to be killed? Not necessarily. If we view the right not to be killed as the right not to be an accident victim, well, no one has that right to begin with. We’re surrounded by both good luck and bad luck: accidents happen. (Even deontological – duty-based – or Kantian ethics could see this shift in the victim class as morally permissible given a non-violation of rights or duties, in addition to the consequentialist reasons based on numbers.)

[…]

Ethical dilemmas with robot cars aren’t just theoretical, and many new applied problems could arise: emergencies, abuse, theft, equipment failure, manual overrides, and many more that represent the spectrum of scenarios drivers currently face every day.

One of the most popular examples is the school-bus variant of the classic trolley problem in philosophy: On a narrow road, your robotic car detects an imminent head-on crash with a non-robotic vehicle — a school bus full of kids, or perhaps a carload of teenagers bent on playing “chicken” with you, knowing that your car is programmed to avoid crashes. Your car, naturally, swerves to avoid the crash, sending it into a ditch or a tree and killing you in the process.

At least with the bus, this is probably the right thing to do: to sacrifice yourself to save 30 or so schoolchildren. The automated car was stuck in a no-win situation and chose the lesser evil; it couldn’t plot a better solution than a human could.

But consider this: Do we now need a peek under the algorithmic hood before we purchase or ride in a robot car? Should the car’s crash-avoidance feature, and possible exploitations of it, be something explicitly disclosed to owners and their passengers — or even signaled to nearby pedestrians? Shouldn’t informed consent be required to operate or ride in something that may purposely cause our own deaths?

It’s one thing when you, the driver, make a choice to sacrifice yourself. But it’s quite another for a machine to make that decision for you involuntarily.

Ref: The Ethics of Saving Lives With Autonomous Cars Are Far Murkier Than You Think – Wired

Ref: Ethics + Emerging Sciences Group

Walking is healthy, so all of the pundits and experts say. While this simple cardiovascular activity may be essential for our inner organs, it can wreak havoc on our outer ones. Case in point? The ankle and the knee are especially susceptible to walking-related strain and injury. A whole line of “safe” shoes has entered the market to sate the demand of consumers looking for a less impactful way to walk or run. Now, there is an awesome tech-heavy exoskeleton that turns us from walkers to, uh, robotic super-walkers. We won’t be able to leap a building in a single bound but maybe we can get close.

Ref: Yaskawa develops a walking-assistance robot (Yaskawa développe une robot pour l’aide à la marche) – Humanoïde