Monthly Archives: December 2012

How Artificial Intelligence Sees Us

Right now, there is a neural network of 1,000 computers at Google’s X lab that has taught itself to recognize humans and cats on the internet. But the network has also learned to recognize some weirder things. What can this machine’s unprecedented new capability teach us about what future artificial intelligences might actually be like?

This is, to me, the most interesting part of the research. What are the patterns in human existence that jump out to non-human intelligences? Certainly 10 million videos from YouTube do not comprise the whole of human existence, but it is a pretty good start. They reveal a lot of things about us we might not have realized, like a propensity to orient tools at 30 degrees. Why does this matter, you ask? It doesn’t matter to you, because you’re human. But it matters to XNet.

What else will matter to XNet? Will it really discern a meaningful difference between cats and humans? What about the difference between a tool and a human body? This kind of question is a major concern for University of Oxford philosopher Nick Bostrom, who has written about the need to program AIs so that they don’t display a “lethal indifference” to humanity. In other words, he’s not as worried about a Skynet scenario where the AIs want to crush humans — he’s worried that AIs won’t recognize humans as being any more interesting than, say, a spatula. This becomes a problem if, as MIT roboticist Cynthia Breazeal has speculated, human-equivalent machine minds won’t emerge until we put them into robot bodies. What if XNet exists in a thousand robots, and they all decide for some weird reason that humans should stand completely still at 30 degree angles? That’s some lethal indifference right there.

Ref: How artificial intelligences will see us – io9

Ref: Building High-level Features Using Large Scale Unsupervised Learning – Google Research

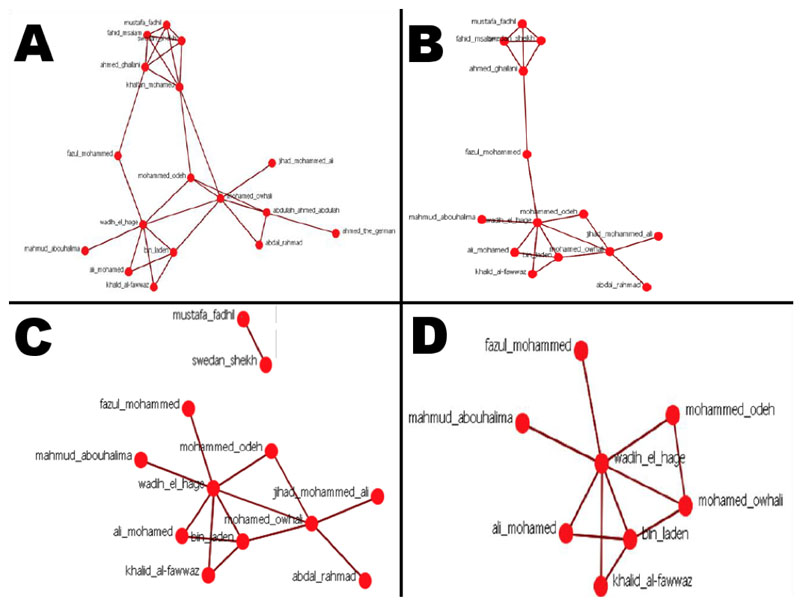

Death by Algorithm: Which Terrorist Should Disappear First?

A West Point team has created an algorithm, called GREEDY_FRAGILE, that analyzes the connections between members of a terrorist network. The aim of the algorithm is to show who should be killed in order to weaken the network.
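To make the idea concrete, here is a minimal Python sketch of greedy node removal on a toy graph. It is not the West Point code: the objective used here (Freeman degree centralization, i.e. how star-like the network is), the function names, and the example network are all assumptions chosen for illustration. At each step it removes whichever node leaves the remaining network most dependent on a single hub.

```python
from typing import Dict, List, Set

Graph = Dict[str, Set[str]]  # undirected graph as an adjacency map


def degree_centralization(graph: Graph) -> float:
    """Freeman degree centralization: 0.0 when links are spread evenly,
    1.0 for a perfect star. ASSUMPTION: used here as a stand-in for
    'how fragile the network is'."""
    n = len(graph)
    if n < 3:
        return 0.0
    degrees = [len(neighbors) for neighbors in graph.values()]
    max_degree = max(degrees)
    return sum(max_degree - d for d in degrees) / ((n - 1) * (n - 2))


def remove_node(graph: Graph, node: str) -> Graph:
    """Return a copy of the graph without `node` and its links."""
    return {v: nbrs - {node} for v, nbrs in graph.items() if v != node}


def greedy_removal(graph: Graph, k: int) -> List[str]:
    """Greedily pick up to k nodes; each pick maximizes the centralization
    of what remains. Ties are broken alphabetically. Hypothetical helper,
    not the published algorithm."""
    removed: List[str] = []
    current = graph
    for _ in range(k):
        if not current:
            break
        best = max(
            sorted(current),
            key=lambda v: degree_centralization(remove_node(current, v)),
        )
        removed.append(best)
        current = remove_node(current, best)
    return removed


# Toy network (made up): hub "h" links to a, b, c; "x" also links to a and b.
toy: Graph = {
    "h": {"a", "b", "c"},
    "x": {"a", "b"},
    "a": {"h", "x"},
    "b": {"h", "x"},
    "c": {"h"},
}

if __name__ == "__main__":
    print(greedy_removal(toy, 1))  # -> ['x']
```

On this toy network the greedy step picks the secondary connector `x` rather than the hub `h`: removing `x` leaves a pure star in which everything depends on `h`.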

But could human lives be treated just as another mathematical problem?

The problem is that a math model, like a metaphor, is a simplification. This type of modeling came out of the sciences, where the behavior of particles in a fluid, for example, is predictable according to the laws of physics.

In so many Big Data applications, a math model attaches a crisp number to human behavior, interests and preferences. The peril of that approach, as in finance, was the subject of a recent book by Emanuel Derman, a former quant at Goldman Sachs and now a professor at Columbia University. Its title is “Models. Behaving. Badly.”

Ref: Death by Algorithm: West Point Code Shows Which Terrorists Should Disappear First – Wired

Ref: Shaping Operations to Attack Robust Terror Networks – United States Military Academy

Ref: Sure, Big Data Is Great. But So Is Intuition. – The New York Times

Ray Kurzweil joins Google as Director of Engineering

Knowing Ray Kurzweil’s views on human beings (cf. the singularity), what are Google’s future plans and agenda?

“In 1999, I said that in about a decade we would see technologies such as self-driving cars and mobile phones that could answer your questions, and people criticized these predictions as unrealistic. Fast forward a decade — Google has demonstrated self-driving cars, and people are indeed asking questions of their Android phones. It’s easy to shrug our collective shoulders as if these technologies have always been around, but we’re really on a remarkable trajectory of quickening innovation, and Google is at the forefront of much of this development.

“I’m thrilled to be teaming up with Google to work on some of the hardest problems in computer science so we can turn the next decade’s ‘unrealistic’ visions into reality.”

Singularity Hub reached out to Kurzweil to learn what he and Google aim to accomplish. He told us, “We hope to combine my fifty years of experience in thinking about thinking with Google scale resources (in everything—engineering, computing, communications, data, users) to create truly useful AI that will make all of us smarter.”

Ref: Kurzweil joins Google to work on new projects involving machine learning and language processing – Kurzweilai

Ref: Ray Kurzweil Teams Up With Google to Tackle AI – SingularityHub

Peter Thiel Interview on the Future of Technology

Question from the audience: How could you ever design a system that responds unpredictably? A cat or gorilla responds to stimulus unpredictably. But computers respond predictably.

Peter Thiel: There are a lot of ways in which computers already respond unpredictably. Microsoft Windows crashes unpredictably. Chess computers make unpredictable moves. These systems are deterministic, of course, in that they’ve been programmed. But often it’s not at all clear to their users what they’ll actually do. What move will Deep Blue make next? Practically speaking, we don’t know. What we do know is that the computer will play chess.

It’s harder if you have a computer that is smarter than humans. This becomes almost a theological question. If God always answers your prayers when you pray, maybe it’s not really God; maybe it’s a super intelligent computer that is working in a completely determinate way.

Question from the audience: How important is empathy in law? Human Rights Watch just released a report about fully autonomous robot military drones that actually make all the targeting decisions that humans are currently making. This seems like a pretty ominous development.

Peter Thiel: Briefly recapping my thesis here should help us approach this question. My general bias is pro-computer, pro-AI, and pro-transparency, with reservations here and there. In the main, our legal system deviates from a rational system not in a superrational way (i.e. empathy leading to otherwise unobtainable truth) but rather in a subrational way, where people are angry and act unjustly.

If you could have a system with zero empathy but also zero hate, that would probably be a large improvement over the status quo.

Regarding your example of automated killing in war contexts: that’s certainly very jarring. One can see a lot of problems with it. But the fundamental problem is not that the machines are killing people without feeling bad about it. The problem is simply that they’re killing people.

Question from the audience: But Human Rights Watch says that more automated machines will kill more people, because human soldiers and operators sometimes hold back because of emotion and empathy.

Peter Thiel: This sort of opens up a counterfactual debate. Theory would seem to go the other way: more precision in war, such that you kill only actual combatants, results in fewer deaths because there is less collateral damage. Think of the carnage on the front in World War I. Suppose you have 1,000 people getting killed each day, and this continues for 3-4 years straight. Shouldn’t somebody have figured out that this was a bad idea? Why didn’t the people running things put an end to this? These questions suggest that our normal intuitions about war are completely wrong. If you heard that a child was being killed in an adjacent room, your instinct would be to run over and try to stop it. But in war, when many thousands are being killed… well, one sort of wonders how this is even possible. Clearly the normal intuitions don’t work.

One theory is that the politicians and generals who are running things are actually sociopaths who don’t care about the human costs. As we understand more neurobiology, it may come to light that we have a political system in which the people who want and manage to get power are, in fact, sociopaths. You can also get here with a simple syllogism: There’s not much empathy in war. That’s strange because most people have empathy. So it’s very possible that the people making war do not.

So, while it’s obvious that drones killing people in war is very disturbing, it may just be the war that is disturbing, and our intuitions are throwing us off.

Ref: Peter Thiel on The Future of Legal Technology, Notes Essay – Blake Masters

Game of Drones

And although Mr Karem was not involved in the decision to arm the Predator, he has no objection to the use of drones as weapons platforms. “At least people are now working on how to kill the minimum number of people on the other side,” he says. “The missiles on the Predator are way too capable. Weapons for UAVs are going to get smaller and smaller to avoid collateral damage.” – Abe Karem, The drone father

Automated Blackhawk

Importantly, the RASCAL was operating on the fly. “No prior knowledge of the terrain was used,” Matthew Whalley, the Army’s Autonomous Rotorcraft Project lead, told Dailytech.

The RASCAL is just the latest for a military that is serious about removing its soldiers from harm’s way and letting robots do the dirty work. Already 30 percent of all US military aircraft are drones. And the navy’s X-47B robotic fighter is well on course to become the first autonomous air vehicle to take off and land on an aircraft carrier. Just days ago it completed its first catapult takeoff (from the ground).

Ref: Automated Blackhawk Helicopter Completes First Flight Test – SingularityHub

A Human Will Always Decide When a Robot Kills You

Human rights groups and nervous citizens fear that technological advances in autonomy will slowly lead to the day when robots make that critical decision for themselves. But according to a new policy directive issued by a top Pentagon official, there shall be no SkyNet, thank you very much.

Here’s what happened while you were preparing for Thanksgiving: Deputy Defense Secretary Ashton Carter signed, on November 21, a series of instructions to “minimize the probability and consequences of failures” in autonomous or semi-autonomous armed robots “that could lead to unintended engagements,” starting at the design stage.

Ref: Pentagon: A Human Will Always Decide When a Robot Kills You – Wired

Ref: Autonomy in Weapon Systems – Department of Defense (via Cryptome)