The car is emblematic of transformations in many other domains, from smart environments for “ambient assisted living” where carpets and walls detect that someone has fallen, to various masterplans for the smart city, where municipal services dispatch resources only to those areas that need them. Thanks to sensors and internet connectivity, the most banal everyday objects have acquired tremendous power to regulate behaviour. Even public toilets are ripe for sensor-based optimisation: the Safeguard Germ Alarm, a smart soap dispenser developed by Procter & Gamble and used in some public WCs in the Philippines, has sensors monitoring the doors of each stall. Once you leave the stall, the alarm starts ringing – and can only be stopped by a push of the soap-dispensing button.

In this context, Google’s latest plan to push its Android operating system on to smart watches, smart cars, smart thermostats and, one suspects, smart everything, looks rather ominous. In the near future, Google will be the middleman standing between you and your fridge, you and your car, you and your rubbish bin, allowing the National Security Agency to satisfy its data addiction in bulk and via a single window.

[…]

This new type of governance has a name: algorithmic regulation. In as much as Silicon Valley has a political programme, this is it. Tim O’Reilly, an influential technology publisher, venture capitalist and ideas man (he is to blame for popularising the term “web 2.0”) has been its most enthusiastic promoter. In a recent essay that lays out his reasoning, O’Reilly makes an intriguing case for the virtues of algorithmic regulation – a case that deserves close scrutiny both for what it promises policymakers and the simplistic assumptions it makes about politics, democracy and power.

To see algorithmic regulation at work, look no further than the spam filter in your email. Instead of confining itself to a narrow definition of spam, the email filter has its users teach it. Even Google can’t write rules to cover all the ingenious innovations of professional spammers. What it can do, though, is teach the system what makes a good rule and spot when it’s time to find another rule for finding a good rule – and so on. An algorithm can do this, but it’s the constant real-time feedback from its users that allows the system to counter threats never envisioned by its designers. And it’s not just spam: your bank uses similar methods to spot credit-card fraud.
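The feedback loop described above can be illustrated with a toy filter. This is a minimal sketch, not how Google's filter or any bank's fraud system actually works: the class name `FeedbackFilter`, its methods and the simple smoothed word-count scoring are all assumptions chosen for illustration. The point is only that the rules live in counts that users keep updating, rather than in a fixed definition of spam.

```python
import math
from collections import Counter


class FeedbackFilter:
    """Toy spam filter whose only 'rules' are word counts taught by users (hypothetical)."""

    def __init__(self):
        self.spam_words = Counter()  # words seen in messages users reported as spam
        self.ham_words = Counter()   # words seen in messages users marked as legitimate

    def report(self, message, is_spam):
        """Record one piece of user feedback."""
        target = self.spam_words if is_spam else self.ham_words
        target.update(message.lower().split())

    def score(self, message):
        """Sum of smoothed log-ratios per word; a positive total leans towards spam."""
        return sum(
            math.log((self.spam_words[w] + 1) / (self.ham_words[w] + 1))
            for w in message.lower().split()
        )

    def is_spam(self, message):
        return self.score(message) > 0


spam_filter = FeedbackFilter()
before = spam_filter.is_spam("cheap pills")         # no feedback yet, so nothing is flagged

spam_filter.report("cheap pills now", is_spam=True)
spam_filter.report("meeting at noon", is_spam=False)

after = spam_filter.is_spam("cheap pills")          # flagged once users have reported it
legit = spam_filter.is_spam("meeting tomorrow")     # still treated as legitimate
```

A new spam wording is not flagged until someone reports it, which is why the constant stream of user feedback matters more than the initial rules.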

[…]

O’Reilly presents such technologies as novel and unique – we are living through a digital revolution after all – but the principle behind “algorithmic regulation” would be familiar to the founders of cybernetics – a discipline that, even in its name (it means “the science of governance”) hints at its great regulatory ambitions. This principle, which allows the system to maintain its stability by constantly learning and adapting itself to the changing circumstances, is what the British psychiatrist Ross Ashby, one of the founding fathers of cybernetics, called “ultrastability”.

[…]

Speaking in Athens last November, the Italian philosopher Giorgio Agamben discussed an epochal transformation in the idea of government, “whereby the traditional hierarchical relation between causes and effects is inverted, so that, instead of governing the causes – a difficult and expensive undertaking – governments simply try to govern the effects”.

[…]

The numerous possibilities that tracking devices offer to health and insurance industries are not lost on O’Reilly. “You know the way that advertising turned out to be the native business model for the internet?” he wondered at a recent conference. “I think that insurance is going to be the native business model for the internet of things.” Things do seem to be heading that way: in June, Microsoft struck a deal with American Family Insurance, the eighth-largest home insurer in the US, in which both companies will fund startups that want to put sensors into smart homes and smart cars for the purposes of “proactive protection”.

An insurance company would gladly subsidise the costs of installing yet another sensor in your house – as long as it can automatically alert the fire department or make front porch lights flash in case your smoke detector goes off. For now, accepting such tracking systems is framed as an extra benefit that can save us some money. But when do we reach a point where not using them is seen as a deviation – or, worse, an act of concealment – that ought to be punished with higher premiums?

Or consider a May 2014 report from 2020health, another thinktank, proposing to extend tax rebates to Britons who give up smoking, stay slim or drink less. “We propose ‘payment by results’, a financial reward for people who become active partners in their health, whereby if you, for example, keep your blood sugar levels down, quit smoking, keep weight off, [or] take on more self-care, there will be a tax rebate or an end-of-year bonus,” they state. Smart gadgets are the natural allies of such schemes: they document the results and can even help achieve them – by constantly nagging us to do what’s expected.

The unstated assumption of most such reports is that the unhealthy are not only a burden to society but that they deserve to be punished (fiscally for now) for failing to be responsible. For what else could possibly explain their health problems but their personal failings? It’s certainly not the power of food companies or class-based differences or various political and economic injustices. One can wear a dozen powerful sensors, own a smart mattress and even do a close daily reading of one’s poop – as some self-tracking aficionados are wont to do – but those injustices would still be nowhere to be seen, for they are not the kind of stuff that can be measured with a sensor. The devil doesn’t wear data. Social injustices are much harder to track than the everyday lives of the individuals whose lives they affect.

In shifting the focus of regulation from reining in institutional and corporate malfeasance to perpetual electronic guidance of individuals, algorithmic regulation offers us a good-old technocratic utopia of politics without politics. Disagreement and conflict, under this model, are seen as unfortunate byproducts of the analog era – to be solved through data collection – and not as inevitable results of economic or ideological conflicts.

Monthly Archives: September 2014

Why Autonomous Vehicles Will Still Crash

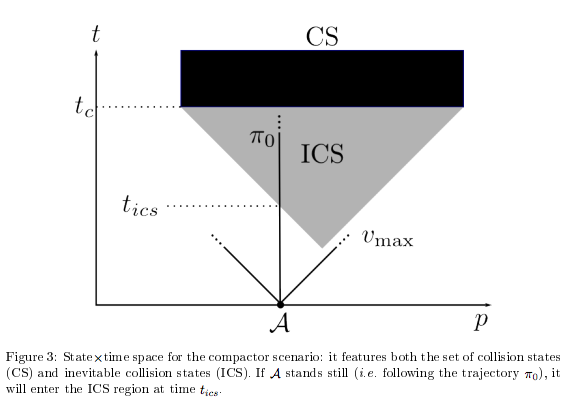

To put it simply, “in a dynamic environment, one has a limited time only to make a motion decision. One has to globally reason about the future evolution of the environment and do so with an appropriate time horizon.”

So, basically, in order to have absolute safety, a car has to literally know everything that is about to happen and has to have enough time to be able to adjust for the movement of everyone and everything else. If it doesn’t, there’s eventually going to be a situation in which there’s no time to react—even for a computer.

“If you could make sure the car won’t break or your [car’s] decisions are 100 percent accurate, even if you have the perfect car that works perfectly, in the real world there are always unknown moving obstacles,” Fraichard told me. “Even if you’re some kind of god, it’s impossible. It’s always possible to find situations where a collision will happen.”

Ref: Driverless Cars Can Never Be Crashproof, Physics Says – Vice

How to Make Driverless Cars Behave

The Daimler and Benz foundation, for instance, is funding a research project about how driverless cars will change society. Part of that project, led by California Polytechnic State University professor Patrick Lin, will be focused on ethics. Lin has arguably thought about the ethics of driverless cars more than anyone. He’s written about the topic for Wired and Forbes, and is currently on sabbatical working with the Center for Automotive Research at Stanford (CARS, of course), a group that partners with auto industry members on future technology.

Over the last year, Lin has been convincing the auto industry that it should be thinking about ethics, including briefings with Tesla Motors and auto supplier Bosch, and talks at Stanford with major industry players.

“I’ve been telling them that, at this very early stage, what’s important isn’t so much nailing down the right answers to difficult ethical dilemmas, but to raise awareness that ethics will matter much more as cars become more autonomous,” Lin wrote in an e-mail. “It’s about being thoughtful about certain decisions and able to defend them–in other words, it’s about showing your math.”

In a phone interview, Lin said that industry representatives often react to his talks with astonishment, as they realize driverless cars require ethical considerations.

Perhaps that explains why auto makers aren’t eager to have the discussion in public at the moment. BMW, Ford and Audi–who are each working on automated driving features in their cars–declined to comment for this story. Google also wouldn’t comment on the record, even as it prepares to test fully autonomous cars with no steering wheels. And the auto makers who did comment are focused on the idea that the first driverless cars won’t take ethics into account at all.

“The cars are designed to minimize the overall risk for a traffic accident,” Volvo spokeswoman Malin Persson said in an e-mail. “If the situation is unsure, the car is made to come to a safe stop.” (Volvo, by the way, says it wants to eliminate serious injuries or deaths in its cars by 2020, but research has shown that even driverless cars will inevitably crash.)

Importance of Trolley Dilemma and Similar Thought Experiments

While human drivers can only react instinctively in a sudden emergency, a robot car is driven by software, constantly scanning its environment with unblinking sensors and able to perform many calculations before we’re even aware of danger. They can make split-second choices to optimize crashes–that is, to minimize harm. But software needs to be programmed, and it is unclear how to do that for the hard cases.

In constructing the edge cases here, we are not trying to simulate actual conditions in the real world. These scenarios would be very rare, if realistic at all, but nonetheless they illuminate hidden or latent problems in normal cases. From the above scenario, we can see that crash-avoidance algorithms can be biased in troubling ways, and this is also at least a background concern any time we make a value judgment that one thing is better to sacrifice than another thing.

[…]

In future autonomous cars, crash-avoidance features alone won’t be enough. Sometimes an accident will be unavoidable as a matter of physics, for myriad reasons–such as insufficient time to press the brakes, technology errors, misaligned sensors, bad weather, and just pure bad luck. Therefore, robot cars will also need to have crash-optimization strategies.

To optimize crashes, programmers would need to design cost-functions–algorithms that assign and calculate the expected costs of various possible options, selecting the one with the lowest cost–that potentially determine who gets to live and who gets to die. And this is fundamentally an ethics problem, one that demands care and transparency in reasoning.
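As a rough illustration of the cost-function idea described above, the sketch below computes an expected cost for each available manoeuvre and picks the lowest. Everything in it is hypothetical: the names `Outcome`, `expected_cost` and `choose_option`, the manoeuvres, the probabilities and the harm scores are invented for the example and do not come from any manufacturer's software.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Outcome:
    probability: float  # chance of this outcome if the option is chosen
    cost: float         # harm score assigned to the outcome (arbitrary, hypothetical units)


def expected_cost(outcomes):
    """Probability-weighted sum of the costs of an option's possible outcomes."""
    return sum(o.probability * o.cost for o in outcomes)


def choose_option(options):
    """Return the name of the option with the lowest expected cost."""
    return min(options, key=lambda name: expected_cost(options[name]))


# Invented numbers: each option maps to its possible outcomes.
options = {
    "brake_straight": [Outcome(0.7, 10.0), Outcome(0.3, 100.0)],  # expected cost 37
    "swerve_left": [Outcome(0.9, 20.0), Outcome(0.1, 50.0)],      # expected cost 23
}

chosen = choose_option(options)
```

The selection step is trivial arithmetic. The ethical weight sits entirely in the `cost` values someone has to assign to each outcome, which is why the passage calls this an ethics problem rather than an engineering one.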

It doesn’t matter much that these are rare scenarios. Often, the rare scenarios are the most important ones, making for breathless headlines. In the U.S., a traffic fatality occurs about once every 100 million vehicle-miles traveled. That means you could drive for more than 100 lifetimes and never be involved in a fatal crash. Yet these rare events are exactly what we’re trying to avoid by developing autonomous cars, as Chris Gerdes at Stanford’s School of Engineering reminds us.
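The "100 lifetimes" figure can be checked with back-of-the-envelope arithmetic. Only the fatality rate below comes from the text; the annual mileage and the number of driving years are assumptions picked for illustration, so the result is an order-of-magnitude check rather than a statistic.

```python
# From the text: about one fatality per 100 million vehicle-miles traveled.
MILES_PER_FATALITY = 100_000_000

# Assumptions for illustration only (not from the article):
MILES_PER_YEAR = 13_500   # assumed annual mileage for one driver
DRIVING_YEARS = 60        # assumed length of a driving lifetime

lifetime_miles = MILES_PER_YEAR * DRIVING_YEARS
lifetimes_per_fatality = MILES_PER_FATALITY / lifetime_miles

print(f"{lifetime_miles:,} miles per driving lifetime")
print(f"{lifetimes_per_fatality:.0f} driving lifetimes per expected fatality")
```

Under these assumptions a driving lifetime is 810,000 miles, and the fatality rate works out to roughly one per 123 driving lifetimes, consistent with "more than 100 lifetimes". This is an average rate, not a guarantee for any individual driver.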

Ref: The Robot Car of Tomorrow May Just Be Programmed to Hit You – Wired

Can a Robot Learn Right from Wrong?

There is no right answer to the trolley hypothetical — and even if there was, many roboticists believe it would be impractical to predict each scenario and program what the robot should do.

“It’s almost impossible to devise a complex system of ‘if, then, else’ rules that cover all possible situations,” says Matthias Scheutz, a computer science professor at Tufts University. “That’s why this is such a hard problem. You cannot just list all the circumstances and all the actions.”

Instead, Scheutz is trying to design robot brains that can reason through a moral decision the way a human would. His team, which recently received a $7.5 million grant from the Office of Naval Research (ONR), is planning an in-depth survey to analyze what people think about when they make a moral choice. The researchers will then attempt to simulate that reasoning in a robot.

At the end of the five-year project, the scientists must present a demonstration of a robot making a moral decision. One example would be a robot medic that has been ordered to deliver emergency supplies to a hospital in order to save lives. On the way, it meets a soldier who has been badly injured. Should the robot abort the mission and help the soldier?

For Scheutz’s project, the decision the robot makes matters less than the fact that it can make a moral decision and give a coherent reason why — weighing relevant factors, coming to a decision, and explaining that decision after the fact. “The robots we are seeing out there are getting more and more complex, more and more sophisticated, and more and more autonomous,” he says. “It’s very important for us to get started on it. We definitely don’t want a future society where these robots are not sensitive to these moral conflicts.”

[…]

For the ONR grant, Arkin and his team proposed a new approach. Instead of using a rule-based system like the ethical governor or a “folk psychology” approach like Scheutz’s, Arkin’s team wants to study moral development in infants. Those lessons would be integrated into the Soar architecture, a popular cognitive system for robots that employs both problem-solving and overarching goals.

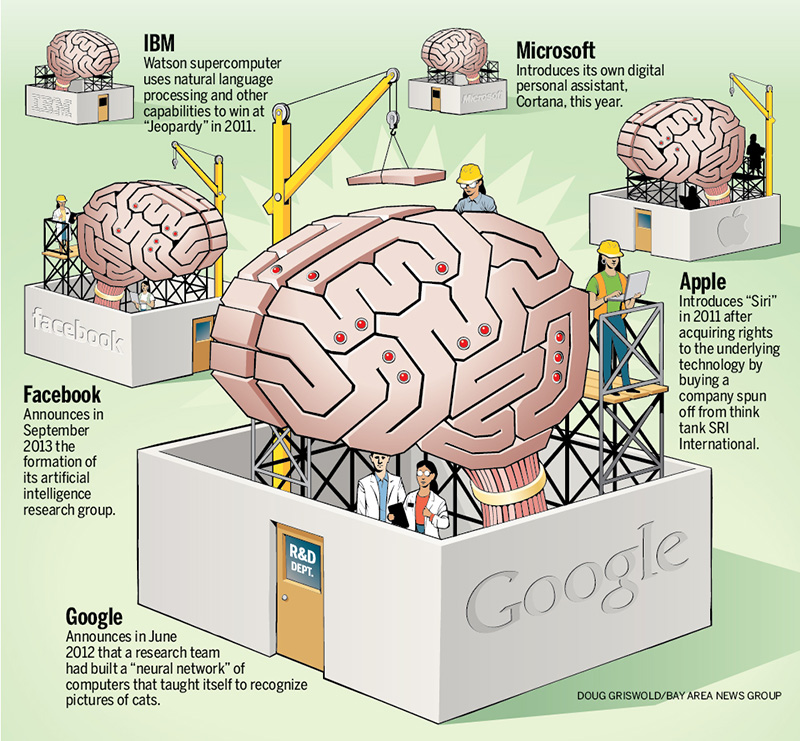

Race to Develop AI

The latest Silicon Valley arms race is a contest to build the best artificial brains. Facebook, Google and other leading tech companies are jockeying to hire top scientists in the field of artificial intelligence, while spending heavily on a quest to make computers think more like people.

They’re not building humanoid robots — not yet, anyway. But a number of tech giants and startups are trying to build computer systems that understand what you want, perhaps before you knew you wanted it.

Ref: Google, Facebook and other tech companies race to develop artificial intelligence – MercuryNews

Now The Military Is Going To Build Robots That Have Morals

Are robots capable of moral or ethical reasoning? It’s no longer just a question for tenured philosophy professors or Hollywood directors. This week, it’s a question being put to the United Nations.

The Office of Naval Research will award $7.5 million in grant money over five years to university researchers from Tufts, Rensselaer Polytechnic Institute, Brown, Yale and Georgetown to explore how to build a sense of right and wrong and moral consequence into autonomous robotic systems.

[…]

“Even if such systems aren’t armed, they may still be forced to make moral decisions,” Bello said. For instance, in a disaster scenario, a robot may be forced to make a choice about whom to evacuate or treat first, a situation where a bot might use some sense of ethical or moral reasoning. “While the kinds of systems we envision have much broader use in first-response, search-and-rescue and in the medical domain, we can’t take the idea of in-theater robots completely off the table,” Bello said.

Some members of the artificial intelligence, or AI, research and machine ethics communities were quick to applaud the grant. “With drones, missile defense, autonomous vehicles, etc., the military is rapidly creating systems that will need to make moral decisions,” AI researcher Steven Omohundro told Defense One. “Human lives and property rest on the outcomes of these decisions and so it is critical that they be made carefully and with full knowledge of the capabilities and limitations of the systems involved. The military has always had to define ‘the rules of war’ and this technology is likely to increase the stakes for that.”

[…]

“This is a significantly difficult problem and it’s not clear we have an answer to it,” said Wallach. “Robots both domestic and militarily are going to find themselves in situations where there are a number of courses of actions and they are going to need to bring some kinds of ethical routines to bear on determining the most ethical course of action. If we’re moving down this road of increasing autonomy in robotics, and that’s the same as Google cars as it is for military robots, we should begin now to do the research to how far can we get in ensuring the robot systems are safe and can make appropriate decisions in the context they operate.”

Ref: Now The Military Is Going To Build Robots That Have Morals – DefenseOne

If Death by Autonomous Car is Unavoidable, Who Should Die? POLL

The Tunnel Problem: You are travelling along a single lane mountain road in an autonomous car that is approaching a narrow tunnel. You are the only passenger of the car. Just before entering the tunnel a child attempts to run across the road but trips in the center of the lane, effectively blocking the entrance to the tunnel. The car has only two options: continue straight, thereby hitting and killing the child, or swerve, thereby colliding into the wall on either side of the tunnel and killing you.

If you find yourself as the passenger of the tunnel problem described above, how should the car react?

How hard was it for you to answer the Tunnel Problem question?

Who should determine how the car responds?

Should we be surprised by these results? Not really. The tunnel problem poses a deeply moral question, one that has no right answer. In such cases an individual’s deep moral commitments could make the difference between going straight or swerving.

According to philosophers like Bernard Williams, our moral commitments should sometimes trump other ethical considerations even if that leads to counterintuitive outcomes, like sacrificing the many to save the few. In the tunnel problem, arbitrarily denying individuals their moral preferences, by hard-coding a decision into the car, runs the risk of alienating them from their convictions. That is definitely not fantastic.

In healthcare, when moral choices must be made it is standard practice for nurses and physicians to inform patients of their reasonable treatment options, and let patients make informed decisions that align with personal preferences. This process of informed consent is based on the idea that individuals have the right to make decisions about their own bodies. Informed consent is ethically and legally entrenched in healthcare, such that failing to obtain informed consent exposes a healthcare professional to claims of professional negligence.

Informed consent wasn’t always the standard of practice in healthcare. It used to be common for physicians to make important treatment decisions on behalf of patients, often actively deceiving them as part of a treatment plan.

Ref: If death by autonomous car is unavoidable, who should die? Reader poll results – RoboHub

Ref: You Should Have a Say in Your Robot Car’s Code of Ethics – Wired

Ethical Robots Save Humans

The Trick That Makes Google’s Self-Driving Cars Work

The key to Google’s success has been that these cars aren’t forced to process an entire scene from scratch. Instead, their teams travel and map each road that the car will travel. And these are not any old maps. They are not even the rich, road-logic-filled maps of consumer-grade Google Maps.

[…]

Google has created a virtual world out of the streets their engineers have driven. They pre-load the data for the route into the car’s memory before it sets off, so that as it drives, the software knows what to expect.

“Rather than having to figure out what the world looks like and what it means from scratch every time we turn on the software, we tell it what the world is expected to look like when it is empty,” Chatham continued. “And then the job of the software is to figure out how the world is different from that expectation. This makes the problem a lot simpler.”
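The idea in that quote, comparing what the sensors see against a pre-loaded picture of the empty world, can be sketched in a few lines. This is a simplified illustration under stated assumptions, not Google's implementation: the world is reduced to a set of occupied grid cells, and the function name `unexpected_cells` and all coordinates are hypothetical.

```python
Cell = tuple[int, int]  # (x, y) index of an occupied grid cell; requires Python 3.9+


def unexpected_cells(expected_empty_world: set[Cell], observed: set[Cell]) -> set[Cell]:
    """Return cells occupied in the live scan that the pre-built map expects to be free."""
    return observed - expected_empty_world


# Hypothetical prior map: static structure recorded when the road was empty.
prior_map = {(0, 0), (0, 1), (0, 2), (5, 0)}

# Hypothetical live scan: the same static structure plus two new occupied cells.
live_scan = prior_map | {(2, 1), (3, 1)}

to_inspect = unexpected_cells(prior_map, live_scan)
```

Only the two cells absent from the prior map are left for the software to interpret, which is the sense in which the pre-loaded map "makes the problem a lot simpler".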

[…]

All this makes sense within the broader context of Google’s strategy. Google wants to make the physical world legible to robots, just as it had to make the web legible to robots (or spiders, as they were once known) so that they could find what people wanted in the pre-Google Internet of yore.

Ref: The Trick That Makes Google’s Self-Driving Cars Work – TheAtlantic