[…]

That’s how this puzzle relates to the non-identity problem posed by Oxford philosopher Derek Parfit in 1984. Suppose we face a policy choice of either depleting some natural resource or conserving it. By depleting it, we might raise the quality of life for people who currently exist, but we would decrease the quality of life for future generations; they would no longer have access to the same resource.

Say that the best we could do is make robot cars reduce traffic fatalities by 1,000 lives. That’s still pretty good. But if they did so by saving all 32,000 would-be victims while causing 31,000 entirely new victims, we wouldn’t be so quick to accept this trade — even if there’s a net savings of lives.

[…]

With this new set of victims, however, are we violating their right not to be killed? Not necessarily. If we view the right not to be killed as the right not to be an accident victim, well, no one has that right to begin with. We’re surrounded by both good luck and bad luck: accidents happen. (Even deontological [duty-based] or Kantian ethics could see this shift in the victim class as morally permissible, given that no rights or duties are violated, in addition to the consequentialist reasons based on numbers.)

[…]

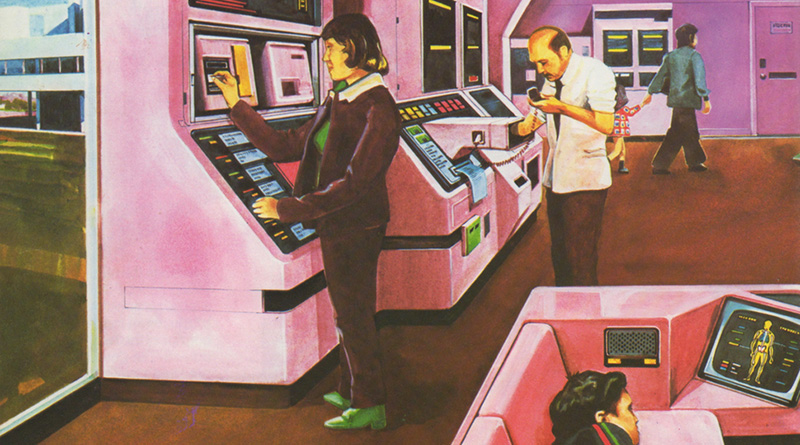

Ethical dilemmas with robot cars aren’t just theoretical, and many new applied problems could arise: emergencies, abuse, theft, equipment failure, manual overrides, and many more that represent the spectrum of scenarios drivers currently face every day.

One of the most popular examples is the school-bus variant of the classic trolley problem in philosophy: On a narrow road, your robotic car detects an imminent head-on crash with a non-robotic vehicle — a school bus full of kids, or perhaps a carload of teenagers bent on playing “chicken” with you, knowing that your car is programmed to avoid crashes. Your car, naturally, swerves to avoid the crash, sending it into a ditch or a tree and killing you in the process.

At least with the bus, this is probably the right thing to do: to sacrifice yourself to save 30 or so schoolchildren. The automated car was stuck in a no-win situation and chose the lesser evil; it couldn’t plot a better solution than a human could.

But consider this: Do we now need a peek under the algorithmic hood before we purchase or ride in a robot car? Should the car’s crash-avoidance feature, and possible exploitations of it, be something explicitly disclosed to owners and their passengers — or even signaled to nearby pedestrians? Shouldn’t informed consent be required to operate or ride in something that may purposely cause our own deaths?

It’s one thing when you, the driver, make a choice to sacrifice yourself. But it’s quite another for a machine to make that decision for you involuntarily.