Monthly Archives: April 2013

Behavio is Now Part of Google

Instead of intentional online connections, […] Behavio, looks at how people’s location, network of phone contacts, physical proximity, and movement throughout the day can help us predict a range of behaviors: anything from fitness to app downloads to mass protests.

—

“We are very excited to announce that the Behavio team is now a part of Google!” Behavio announced on its website today.

“At Behavio, we have always been passionate about helping people better understand the world around them,” the post continued. “We believe that our digital experiences should be better connected with the way we experience the world, and we couldn’t be happier to be able to continue building out our vision within Google.”

Ref: Google Acquires Social Prediction Startup Behavio – MobileMarketingWatch

Lawfare T2000

Pull the Plug on Killer Robots

Campaign to Stop Killer Robots

Over the past decade, the expanded use of unmanned armed vehicles has dramatically changed warfare, bringing new humanitarian and legal challenges. Now rapid advances in technology are resulting in efforts to develop fully autonomous weapons. These robotic weapons would be able to choose and fire on targets on their own, without any human intervention. This capability would pose a fundamental challenge to the protection of civilians and to compliance with international human rights and humanitarian law.

Several nations with high-tech militaries, including China, Israel, Russia, the United Kingdom, and the United States, are moving toward systems that would give greater combat autonomy to machines. If one or more chooses to deploy fully autonomous weapons, a large step beyond remote-controlled armed drones, others may feel compelled to abandon policies of restraint, leading to a robotic arms race. Agreement is needed now to establish controls on these weapons before investments, technological momentum, and new military doctrine make it difficult to change course.

Allowing life-or-death decisions to be made by machines crosses a fundamental moral line. Autonomous robots would lack human judgment and the ability to understand context. These qualities are necessary to make complex ethical choices on a dynamic battlefield, to distinguish adequately between soldiers and civilians, and to evaluate the proportionality of an attack. As a result, fully autonomous weapons would not meet the requirements of the laws of war.

Replacing human troops with machines could make the decision to go to war easier, which would shift the burden of armed conflict further onto civilians. The use of fully autonomous weapons would create an accountability gap, as there is no clarity on who would be legally responsible for a robot’s actions: the commander, programmer, manufacturer, or robot itself? Without accountability, these parties would have less incentive to ensure robots did not endanger civilians, and victims would be left unsatisfied that someone was punished for the harm they experienced.

Hybrid Age

Let us begin with technology’s growing ability to manipulate how much information we have about the world around us. Google Glass and, soon, pixelated contact lenses will allow us to augment reality with a layer of data. Future versions may provide a more intrusive view, such as sensing your vital signs and stress level. Such augmentation has the potential to empower us with a feeling of enhanced access to “reality.” Whether or not this represents truth, however, is elusive. Consider the opposite of augmented reality: “deletive reality.” If pedestrians in New York or Mumbai don’t want to see homeless people, they could delete them from view in real time. This not only diminishes the diversity of reality; it also blocks us from developing empathy.

[…]

The combination of cloud-based data, devices, and software that allow us to search and share, and artificial intelligence capable of semantic understanding heralds the rise of a collective intelligence. The Internet, Jeffrey Stibel argues, is not just becoming like a brain. It is a brain: It ingests data, processes them, and “provides answers without knowing questions.” As our cognitive processes are increasingly shared with devices, networks, and the physical environment, our sense of self increasingly morphs to become the sum of our connections and relationships. Rather than one single identity, we each have a personal identity ecology combining our real and virtual selves and our semantic links floating in the global mind (“Noosphere”). Google’s Sergey Brin calls this having “the entire world’s knowledge connected directly to your mind.”

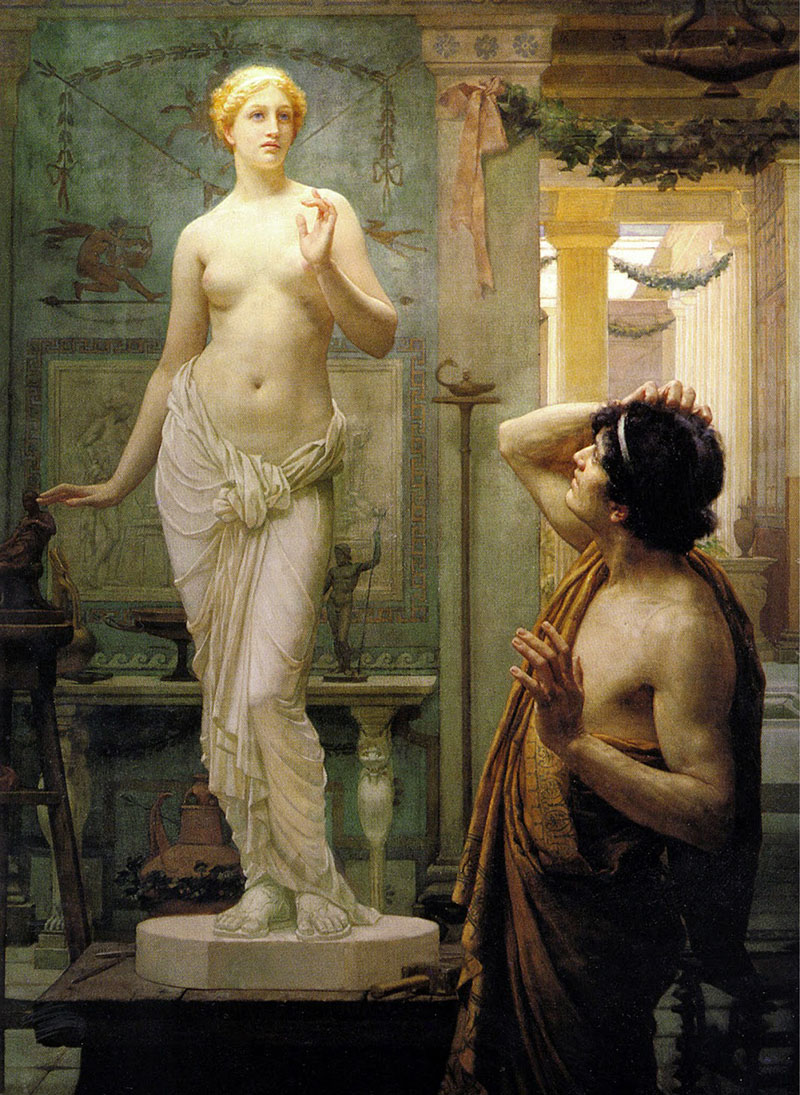

Pygmalion <-> AI

Artificial intelligence is arguably the most useless technology that humans have ever aspired to possess. Actually, let me clarify. It would be useful to have a robot that could make independent decisions while, say, exploring a distant planet, or defusing a bomb. But the ultimate aspiration of AI was never just to add autonomy to a robot’s operating system. The idea wasn’t to enable a computer to search data faster by ‘understanding patterns’, or communicate with its human masters via natural language. The dream of AI was — and is — to create a machine that is conscious. AI means building a mechanical human being. And this goal, as supposedly rational technological projects go, is deeply strange.

[…]

Technology is a cultural phenomenon, and as such it is molded by our cultural values. We prefer good health to sickness so we develop medicine. We value wealth and freedom over poverty and bondage, so we invent markets and the multitudinous thingummies of comfort. We are curious, so we aim for the stars. Yet when it comes to creating conscious simulacra of ourselves, what exactly is our motive? What deep emotions drive us to imagine, and strive to create, machines in our own image? If it is not fear, or want, or curiosity, then what is it? Are we indulging in abject narcissism? Are we being unforgivably vain? Or could it be because of love?

But machines were objects of erotic speculation long before Turing entered the scene. Western literature, ancient and modern, is strewn with mechanical lovers. Consider Pygmalion, the Cypriot sculptor and favorite of Aphrodite. Ovid, in his Metamorphoses, describes him carving a perfect woman out of ivory. Her name is Galatea and she’s so lifelike that Pygmalion immediately falls in love with her. He prays to Aphrodite to make the statue come to life. The love goddess already knows a thing or two about beautiful, non-biological maidens: her husband Hephaestus has constructed several good-looking fembots to lend a hand in his Olympian workshop. She grants Pygmalion’s wish; Pygmalion kisses his perfect creation, and Galatea becomes a real woman. They live happily ever after.

[…]

As the 20th century came into its own, Pygmalion collided with modernity and its various theories about the human mind: psychoanalysis, behaviourist psychology, the tabula rasa whereby one writes the algorithm of personhood upon a clean slate. Galatea becomes Maria, the robot in Fritz Lang’s epic film Metropolis (1927); she is less innocent now, a temptress performing the manic and deeply erotic dance of Babylon in front of goggling men.

To Save Everything, Click Here

Too much assault and battery creates a more serious problem: wrongful appropriation, as Morozov tends to borrow heavily, without attribution, from those he attacks. His critique of Google and other firms engaged in “algorithmic gatekeeping” is basically taken from Lessig’s first book, “Code and Other Laws of Cyberspace,” in which Lessig argued that technology is necessarily ideological and that choices embodied in code, unlike law, are dangerously insulated from political debate. Morozov presents these ideas as his own and, instead of crediting Lessig, bludgeons him repeatedly. Similarly, Morozov warns readers of the dangers of excessively perfect technologies as if Jonathan Zittrain hadn’t been saying the same thing for the past 10 years. His failure to credit his targets gives the misimpression that Morozov figured it all out himself and that everyone else is an idiot.

Answers from Evgeny Morozov: Recycle the Cycle I, Recycle the Cycle II

Ref: Book review: ‘To Save Everything, Click Here’ by Evgeny Morozov – Washington Post

Google Wants to Build the Star Trek Computer

So I went to Google to interview some of the people who are working on its search engine. And what I heard floored me. “The Star Trek computer is not just a metaphor that we use to explain to others what we’re building,” Singhal told me. “It is the ideal that we’re aiming to build—the ideal version done realistically.” He added that the search team does refer to Star Trek internally when they’re discussing how to improve the search engine. “It comes up often,” Singhal said. “For instance, we might say, ‘Captain Kirk never pulled out a keyboard to ask a question.’ So in that way it becomes one of the design principles—we see that because the Star Trek computer actively relies on speech, if we want to do that we need to work to push the barrier of speech recognition and machine understanding.”

[…] What does it mean that Google really is trying to build the Star Trek computer? I take it as a cue to stop thinking about Google as a “search engine.” That term conjures a staid image: a small box on a page in which you type keywords. A search engine has several key problems. First, most of the time it doesn’t give you an answer; it gives you links to an answer. Second, it doesn’t understand natural language; when you search, you’ve got to adopt the search engine’s curious, keyword-laden patois. Third, and perhaps most importantly, a search engine needs you to ask it questions; it doesn’t pipe in with information when you need it, without your having to ask.

The Star Trek computer worked completely differently. It understood language and was conversational, it gave you answers instead of references to answers, and it anticipated your needs. “It was the perfect search engine,” Singhal said. “You could ask it a question and it would tell you exactly the right answer, one right answer—and sometimes it would tell you things you needed to know in advance, before you could ask it.”

Machine That Predicts Future

An Iranian scientist has claimed to have invented a ‘time machine’ that can predict the future of any individual with 98 per cent accuracy.

Serial inventor Ali Razeghi registered “The Aryayek Time Traveling Machine” with Iran’s state-run Centre for Strategic Inventions, The Telegraph reported.

According to a Fars news agency report, Mr Razeghi, 27, claims the machine uses algorithms to produce a print-out of the details of any individual’s life between five and eight years into their future.

Mr Razeghi, quoted in the Telegraph, said: “My invention easily fits into the size of a personal computer case and can predict details of the next 5-8 years of the life of its users. It will not take you into the future, it will bring the future to you.”

Razeghi is the managing director of Iran’s Centre for Strategic Inventions and reportedly has another 179 inventions registered in his name.

He claims the invention could help the government predict military conflict and forecast fluctuations in the value of foreign currencies and oil prices.

According to Mr Razeghi his latest project has been criticised by his friends and family for “trying to play God”.

Ref: Iranian scientist claims to have invented ‘Time Machine’ that can predict the future – The Independent (via DarkGovernment)