Monthly Archives: November 2014

Algorithms Are Great and All, But They Can Also Ruin Lives

On April 5, 2011, 41-year-old John Gass received a letter from the Massachusetts Registry of Motor Vehicles. The letter informed Gass that his driver’s license had been revoked and that he should stop driving, effective immediately. The only problem was that, as a conscientious driver who had not received so much as a traffic violation in years, Gass had no idea why it had been sent.

After several frantic phone calls, followed up by a hearing with Registry officials, he learned the reason: his image had been automatically flagged by a facial-recognition algorithm designed to scan through a database of millions of state driver’s licenses looking for potential criminal false identities. The algorithm had determined that Gass looked sufficiently like another Massachusetts driver that foul play was likely involved—and the automated letter from the Registry of Motor Vehicles was the end result.

The RMV itself was unsympathetic, claiming that it was the accused individual’s “burden” to clear his or her name in the event of any mistakes, and arguing that the pros of protecting the public far outweighed the inconvenience to the wrongly targeted few.

John Gass is hardly alone in being a victim of algorithms gone awry. In 2007, a glitch in the California Department of Health Services’ new automated computer system terminated the benefits of thousands of low-income seniors and people with disabilities. Without their premiums paid, Medicare canceled those citizens’ health care coverage.

[…]

Equally alarming is the possibility that an algorithm may falsely profile an individual as a terrorist: a fate that befalls roughly 1,500 unlucky airline travelers each week. Those fingered in the past as the result of data-matching errors include former Army majors, a four-year-old boy, and an American Airlines pilot—who was detained 80 times over the course of a single year.

[…]

“We are all so scared of human bias and inconsistency,” says Danielle Citron, professor of law at the University of Maryland. “At the same time, we are overconfident about what it is that computers can do.”

The mistake, Citron suggests, is that we “trust algorithms, because we think of them as objective, whereas the reality is that humans craft those algorithms and can embed in them all sorts of biases and perspectives.” To put it another way, a computer algorithm might be unbiased in its execution, but, as noted, this does not mean that there is not bias encoded within it.

Ref: Algorithms Are Great and All, But They Can Also Ruin Lives – Wired

When Will We Let Go and Let Google Drive Us?

According to Templeton, regulators and policymakers are proving more open to the idea than expected—a number of US states have okayed early driverless cars for public experimentation, along with Singapore, India, Israel, and Japan—but earning the general public’s trust may be a more difficult battle to win.

No matter how many fewer accidents occur due to driverless cars, there may well be a threshold past which we still irrationally choose human drivers over them. That is, we may hold robots to a much higher standard than humans.

This higher standard comes at a price. “People don’t want to be killed by robots,” Templeton said. “They want to be killed by drunks.”

It’s an interesting point—assuming the accident rate is nonzero (and it will be), how many accidents are we willing to tolerate in driverless cars, and is that number significantly lower than the number we’re willing to tolerate with human drivers?

Let’s say robot cars are shown to reduce accidents by 20%. They could potentially prevent some 240,000 accidents (using Templeton’s global number). That’s a big deal. And yet if (fully) employed, they would still cause nearly a million accidents a year. Who would trust them? And at what point does that trust kick in? How close to zero accidents does it have to get?
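The arithmetic behind those figures can be checked with a rough sketch. The excerpt never states Templeton's global number, so the baseline here is back-computed from the two figures it does give (a 20% reduction and about 240,000 accidents prevented); the function names are made up for illustration.

```python
def implied_baseline(prevented: int, reduction_percent: int) -> int:
    """Back out the yearly total implied by 'prevented' being
    'reduction_percent' percent of it. Assumption: the excerpt's
    240,000 is exactly 20% of Templeton's global number."""
    return prevented * 100 // reduction_percent


def remaining_accidents(prevented: int, reduction_percent: int) -> int:
    """Accidents that would still happen after the reduction."""
    return implied_baseline(prevented, reduction_percent) - prevented


if __name__ == "__main__":
    # Figures taken from the excerpt above.
    prevented, reduction_percent = 240_000, 20
    print("implied baseline:", implied_baseline(prevented, reduction_percent))
    print("still occurring: ", remaining_accidents(prevented, reduction_percent))
```

Under that assumption the implied total is 1.2 million a year, and 960,000 would remain, which matches the "nearly a million" in the excerpt.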

And it may turn out that the root of the problem lies not with the technology but with us.

Ref: Summit Europe: When Will We Let Go and Let Google Drive Us? – Singularity Hub

Killer Robots: More talks in 2015?

Government delegates attending next week’s annual meeting of the Convention on Conventional Weapons (CCW) at the United Nations in Geneva will decide whether to continue in 2015 with multilateral talks on questions relating to “lethal autonomous weapons systems.”

Ambassador Simon-Michel chaired the 2014 experts meeting on lethal autonomous weapons systems and has identified several areas for further study or deliberation in his chair’s report, including:

- The notion of meaningful human control of autonomous weapons systems;

- The key ethical question of delegating the right to decide on life and death to a machine;

- The “various” views on the possibility that autonomous weapons would be able to comply with rules of international law and “different” views on the adequacy of existing law;

- Weapons reviews, including Article 36 of Additional Protocol I (1977) to the 1949 Geneva Conventions;

- The accountability gap, including the issue of responsibility at the State level or at an individual level;

- Whether the weapons could change the threshold of use of force.

Ref: Decision Time More Talks in 2015? – Campaign to Stop Killer Robots

Killer Robots

One of them is the Skunk, designed for crowd control. It can douse demonstrators with teargas.

“There could be a dignity issue here; being herded by drones would be like herding cattle,” he said.

But at least drones have a human at the controls.

“[Otherwise] you are giving [the power of life and death] to a machine,” said Heyns. “Normally, there is a human being to hold accountable.

“If it’s a robot, you can put it in jail until its batteries run flat but that’s not much of a punishment.”

Heyns said the advent of the new generation of weapons made it necessary for laws to be introduced that would prohibit the use of systems that could be operated without a significant level of human control.

“Technology is a tool and it should remain a tool, but it is a dangerous tool and should be held under scrutiny. We need to try to define the elements of needful human control,” he said.

Several organisations have voiced concerns about autonomous weapons. The Campaign to Stop Killer Robots wants a ban on fully autonomous weapons.

Ref: Stop Killer Robots While We Can – Times Live

Artificial General Intelligence (AGI)

But how important is self-awareness, really, in creating an artificial mind on par with ours? According to quantum computing pioneer and Oxford physicist David Deutsch, not very.

In an excellent article in Aeon, Deutsch explores why artificial general intelligence (AGI) must be possible, but hasn’t yet been achieved. He calls it AGI to emphasize that he’s talking about a mind like ours, that can think and feel and reason about anything, as opposed to a complex computer program that’s very good at one or a few human-like tasks.

Simply put, his argument for why AGI is possible is this: Since our brains are made of matter, it must be possible, in principle at least, to recreate the functionality of our brains using another type of matter — specifically circuits.

As for Skynet’s self-awareness, Deutsch writes:

That’s just another philosophical misconception, sufficient in itself to block any viable approach to AGI. The fact is that present-day software developers could straightforwardly program a computer to have ‘self-awareness’ in the behavioural sense — for example, to pass the ‘mirror test’ of being able to use a mirror to infer facts about itself — if they wanted to. As far as I am aware, no one has done so, presumably because it is a fairly useless ability as well as a trivial one.

[…]

If we really want to create artificial intelligence, we have to understand what it is we’re trying to create. Deutsch persuasively argues that, as long as we’re focused on self-awareness, we miss out on understanding how our brains actually work, stunting our ability to create artificially intelligent machines.

What matters, Deutsch argues, is “the ability to create new explanations,” to generate theories about the world and all its particulars. In contrast with this, the idea that self-awareness — let alone real intelligence — will spontaneously emerge from a complex computer network is not just science fiction. It’s pure fantasy.

Ref: Terminator is Wrong about AI Self-Awareness – Business Insider

CyberSyn – The origin of the Big Data Nation

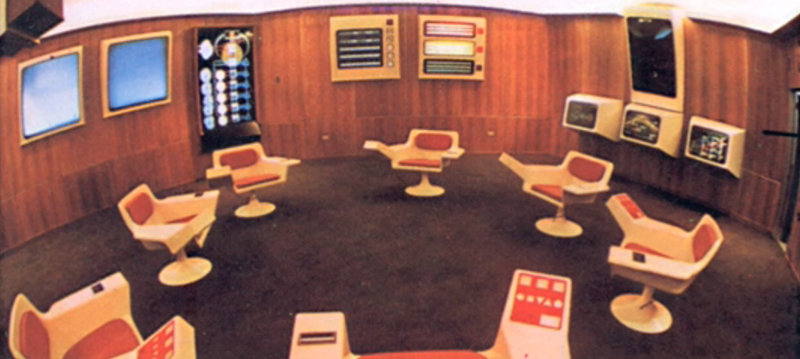

That was a challenge: the Chilean government was running low on cash and supplies; the United States, dismayed by Allende’s nationalization campaign, was doing its best to cut Chile off. And so a certain amount of improvisation was necessary. Four screens could show hundreds of pictures and figures at the touch of a button, delivering historical and statistical information about production—the Datafeed—but the screen displays had to be drawn (and redrawn) by hand, a job performed by four young female graphic designers. […] In addition to the Datafeed, there was a screen that simulated the future state of the Chilean economy under various conditions. Before you set prices, established production quotas, or shifted petroleum allocations, you could see how your decision would play out.

One wall was reserved for Project Cyberfolk, an ambitious effort to track the real-time happiness of the entire Chilean nation in response to decisions made in the op room. Beer built a device that would enable the country’s citizens, from their living rooms, to move a pointer on a voltmeter-like dial that indicated moods ranging from extreme unhappiness to complete bliss. The plan was to connect these devices to a network (it would ride on the existing TV networks) so that the total national happiness at any moment in time could be determined. The algedonic meter, as the device was called (from the Greek algos, “pain,” and hedone, “pleasure”), would measure only raw pleasure-or-pain reactions to show whether government policies were working.

[…]

“The on-line control computer ought to be sensorily coupled to events in real time,” Beer argued in a 1964 lecture that presaged the arrival of smart, net-connected devices—the so-called Internet of Things. Given early notice, the workers could probably solve most of their own problems. Everyone would gain from computers: workers would enjoy more autonomy while managers would find the time for long-term planning. For Allende, this was good socialism. For Beer, this was good cybernetics.

[…]

Suppose that the state planners wanted the plant to expand its cooking capacity by twenty per cent. The modelling would determine whether the target was plausible. Say the existing boiler was used at ninety per cent of capacity, and increasing the amount of canned fruit would mean exceeding that capacity by fifty per cent. With these figures, you could generate a statistical profile for the boiler you’d need. Unrealistic production goals, overused resources, and unwise investment decisions could be dealt with quickly. “It is perfectly possible . . . to capture data at source in real time, and to process them instantly,” Beer later noted. “But we do not have the machinery for such instant data capture, nor do we have the sophisticated computer programs that would know what to do with such a plethora of information if we had it.”

Today, sensor-equipped boilers and tin cans report their data automatically, and in real time. And, just as Beer thought, data about our past behaviors can yield useful predictions. Amazon recently obtained a patent for “anticipatory shipping”—a technology for shipping products before orders have even been placed. Walmart has long known that sales of strawberry Pop-Tarts tend to skyrocket before hurricanes; in the spirit of computer-aided homeostasis, the company knows that it’s better to restock its shelves than to ask why.

[…]

Flowers suggests that real-time data analysis is allowing city agencies to operate in a cybernetic manner. Consider the allocation of building inspectors in a city like New York. If the city authorities know which buildings have caught fire in the past and if they have a deep profile for each such building—if, for example, they know that such buildings usually feature illegal conversions, and their owners are behind on paying property taxes or have a history of mortgage foreclosures—they can predict which buildings are likely to catch fire in the future and decide where inspectors should go first.
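To make the idea concrete, here is a minimal sketch of how such a profile could drive an inspection queue. It is not the city's actual model: it simply counts the three indicators named in the passage and sorts by that count. The `Building` fields, the equal weighting, and the sample addresses are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Building:
    address: str               # hypothetical identifier
    illegal_conversion: bool   # indicator named in the passage
    tax_arrears: bool          # owner behind on property taxes
    foreclosure_history: bool  # history of mortgage foreclosures


def risk_score(b: Building) -> int:
    """Count how many of the three indicators are present (0 to 3).
    Equal weighting is an assumption made for illustration."""
    return sum((b.illegal_conversion, b.tax_arrears, b.foreclosure_history))


def inspection_order(buildings: list[Building]) -> list[Building]:
    """Highest score first; ties keep their input order (sorted is stable)."""
    return sorted(buildings, key=risk_score, reverse=True)


if __name__ == "__main__":
    # Invented sample records, not real data.
    sample = [
        Building("1 Example St", False, False, False),
        Building("2 Example St", True, True, True),
        Building("3 Example St", True, False, False),
    ]
    for b in inspection_order(sample):
        print(risk_score(b), b.address)
```

A real system would presumably learn its weights from past fire records rather than treat every indicator equally, and whatever bias sits in those records would carry over into the ranking.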

[…]

The aim is to replace rigid rules issued by out-of-touch politicians with fluid and personalized feedback loops generated by gadget-wielding customers. Reputation becomes the new regulation: why pass laws banning taxi-drivers from dumping sandwich wrappers on the back seat if the market can quickly punish such behavior with a one-star rating? It’s a far cry from Beer’s socialist utopia, but it relies on the same cybernetic principle: collect as much relevant data from as many sources as possible, analyze them in real time, and make an optimal decision based on the current circumstances rather than on some idealized projection.

[…]

It’s suggestive that Nest—the much admired smart thermostat, which senses whether you’re home and lets you adjust temperatures remotely—now belongs to Google, not Apple. Created by engineers who once worked on the iPod, it has a slick design, but most of its functionality (like its ability to learn and adjust to your favorite temperature by observing your behavior) comes from analyzing data, Google’s bread and butter. The proliferation of sensors with Internet connectivity provides a homeostatic solution to countless predicaments. Google Now, the popular smartphone app, can perpetually monitor us and (like Big Mother, rather than like Big Brother) nudge us to do the right thing—exercise, say, or take the umbrella.

Companies like Uber, meanwhile, insure that the market reaches a homeostatic equilibrium by monitoring supply and demand for transportation. Google recently acquired the manufacturer of a high-tech spoon—the rare gadget that is both smart and useful—to compensate for the purpose tremors that captivated Norbert Wiener. (There is also a smart fork that vibrates when you are eating too fast; “smart” is no guarantee against “dumb.”) The ubiquity of sensors in our cities can shift behavior: a new smart parking system in Madrid charges different rates depending on the year and the make of the car, punishing drivers of old, pollution-prone models. Helsinki’s transportation board has released an Uber-like app, which, instead of dispatching an individual car, coördinates multiple requests for nearby destinations, pools passengers, and allows them to share a much cheaper ride on a minibus.

[…]

For all its utopianism and scientism, its algedonic meters and hand-drawn graphs, Project Cybersyn got some aspects of its politics right: it started with the needs of the citizens and went from there. The problem with today’s digital utopianism is that it typically starts with a PowerPoint slide in a venture capitalist’s pitch deck. As citizens in an era of Datafeed, we still haven’t figured out how to manage our way to happiness. But there’s a lot of money to be made in selling us the dials.

Ref: The Planning Machine – The New Yorker