Monthly Archives: August 2014

Cycorp

IBM’s Watson and Apple’s Siri stirred up awareness across the U.S. of, and a hunger for, something like a Star Trek computer that really worked: an artificially intelligent system that could take instructions in plain spoken language, make the appropriate inferences, and carry out those instructions without needing millions and millions of subroutines hard-coded into it.

As we’ve established, that stuff is very hard. But Cycorp’s goal is to codify general human knowledge and common sense so that computers might make use of it.

Cycorp set itself the task of identifying the tens of millions of pieces of data we rely on as humans (the knowledge that helps us understand the world) and representing them formally so that machines can use them to reason. The company has been working on this continuously since 1984, and next month marks its 30th anniversary.
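To make "a formal way that machines can use to reason" a little more concrete, here is a minimal sketch of forward-chaining inference over a toy knowledge base. It is an illustration under stated assumptions, not CycL and not Cyc's inference engine: the predicate names `isa` and `genls` are borrowed from Cyc's published vocabulary, while the facts, the two rules, and the `forward_chain` helper are hypothetical.

```python
from typing import Set, Tuple

# A fact is (predicate, subject, object); a hypothetical, simplified format.
Fact = Tuple[str, str, str]


def forward_chain(facts: Set[Fact]) -> Set[Fact]:
    """Apply two illustrative rules until no new facts can be derived:
    1. (genls A B) and (genls B C)  =>  (genls A C)   # collection inclusion is transitive
    2. (isa X A)   and (genls A B)  =>  (isa X B)     # members inherit supercollections
    """
    known = set(facts)
    while True:
        genls = [(a, b) for p, a, b in known if p == "genls"]
        isa = [(x, a) for p, x, a in known if p == "isa"]
        new: Set[Fact] = set()
        for a, b in genls:
            for b2, c in genls:
                if b == b2:
                    new.add(("genls", a, c))
        for x, a in isa:
            for a2, b in genls:
                if a == a2:
                    new.add(("isa", x, b))
        new -= known
        if not new:  # fixed point reached: nothing left to infer
            return known
        known |= new


kb = {
    ("isa", "Fido", "Dog"),
    ("genls", "Dog", "Mammal"),
    ("genls", "Mammal", "Animal"),
}
closed = forward_chain(kb)
print(("isa", "Fido", "Animal") in closed)
```

Nobody told the system that Fido is an animal; it inferred that from two general facts. Cyc's bet is that scaling this kind of explicit, checkable reasoning to millions of assertions yields common sense, which is a very different approach from the statistical one Lenat criticizes below.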

“Many of the people are still here from 30 years ago — Mary Shepherd and I started [Cycorp] in August of 1984 and we’re both still working on it,” Cycorp CEO Doug Lenat said. “It’s the most important project one could work on, which is why this is what we’re doing. It will amplify human intelligence.”

It’s only a slight stretch to say Cycorp is building a brain out of software, and they’re doing it from scratch.

“Any time you look at any kind of real life piece of text or utterance that one human wrote or said to another human, it’s filled with analogies, modal logic, belief, expectation, fear, nested modals, lots of variables and quantifiers,” Lenat said. “Everyone else is looking for a free-lunch way to finesse that. Shallow chatbots show a veneer of intelligence or statistical learning from large amounts of data. Amazon and Netflix recommend books and movies very well without understanding in any way what they’re doing or why someone might like something.

“It’s the difference between someone who understands what they’re doing and someone going through the motions of performing something.”

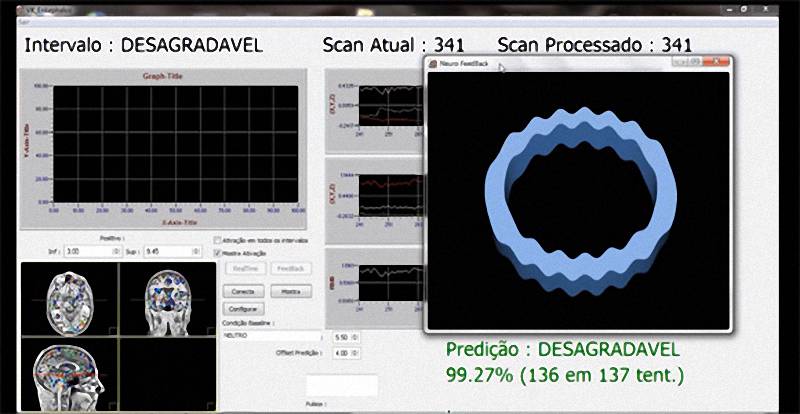

Machines Teach Humans How to Feel Using Neurofeedback

Yet some people, often as a result of traumatic experiences or neglect, don’t experience fundamental social feelings such as affection and pride normally. Could a machine teach them these quintessentially human responses? A thought-provoking Brazilian study recently published in PLoS One suggests it could.

Researchers at the D’Or Institute for Research and Education outside Rio de Janeiro, Brazil, performed functional MRI scans on healthy young adults while asking them to focus on past experiences that epitomized feelings of non-sexual affection or pride of accomplishment. They set up a basic form of artificial intelligence to categorize the fMRI readings in real time as affection, pride, or neither. They then showed the experimental group a graphic form of biofeedback that told them whether their brain activity was fully manifesting the target feeling; the control group saw meaningless graphics.
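The real-time loop described above (classify each reading as affection, pride, or neither, then feed a score back to the participant) can be sketched roughly as follows. This is a minimal illustration under loud assumptions: it uses a simple nearest-centroid classifier on already-extracted feature vectors, which is a swapped-in technique and not necessarily what the D’Or team used, and the helper names, toy two-dimensional vectors, and inverse-distance feedback score are all hypothetical.

```python
import math
from typing import Dict, List, Sequence

Vector = Sequence[float]


def train_centroids(examples: Dict[str, List[Vector]]) -> Dict[str, List[float]]:
    """Average each label's training vectors into a single centroid."""
    return {
        label: [sum(col) / len(vectors) for col in zip(*vectors)]
        for label, vectors in examples.items()
    }


def classify(centroids: Dict[str, List[float]], sample: Vector) -> str:
    """Label a new reading with the nearest centroid (Euclidean distance)."""
    return min(centroids, key=lambda label: math.dist(centroids[label], sample))


def feedback_score(centroids: Dict[str, List[float]], sample: Vector,
                   target: str, eps: float = 1e-9) -> float:
    """Hypothetical feedback signal in (0, 1]: the target label's share of
    inverse distance. It approaches 1.0 as the reading nears the target centroid."""
    weights = {label: 1.0 / (math.dist(c, sample) + eps)
               for label, c in centroids.items()}
    return weights[target] / sum(weights.values())


# Toy "training scans": each reading reduced to a 2-D feature vector (assumption).
centroids = train_centroids({
    "affection": [[1.0, 0.0], [1.2, 0.1]],
    "pride": [[0.0, 1.0], [0.1, 1.1]],
    "neither": [[0.0, 0.0], [0.1, 0.1]],
})
reading = [0.9, 0.1]
print(classify(centroids, reading), round(feedback_score(centroids, reading, "affection"), 2))
```

In a neurofeedback setting, the score would drive the on-screen graphic: the closer a participant's pattern gets to the target emotion's signature, the fuller the display.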

The results showed that the machine-learning algorithms could detect complex emotions arising from neurons in various cortical and subcortical regions, and that participants were able to hone their feelings based on the feedback, learning to light up all of those brain regions on command.

[…]

Here we must pause to note that the researchers did not miss the resemblance between the experiment’s artificial intelligence system and the “empathy box” in “Blade Runner” and the Philip K. Dick story on which it’s based. Yes, the system could potentially be used by intrusive government bodies to interrogate a person’s inner feelings, which is about as creepy as it gets. To cite that other dystopian science fiction blockbuster, “Minority Report,” it could identify criminal tendencies and condemn people before they ever commit a crime.

Ref: MACHINES TEACH HUMANS HOW TO FEEL USING NEUROFEEDBACK – SingularityHub

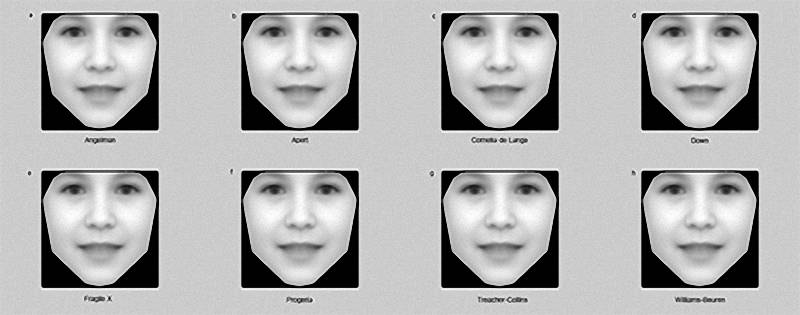

Algorithm Hunts Rare Genetic Disorders from Facial Features in Photos

Even before birth, concerned parents often fret over the possibility that their children may have underlying medical issues. Chief among these worries are rare genetic conditions that can drastically shape the course and reduce the quality of their lives. While progress is being made in genetic testing, diagnosis of many conditions occurs only after symptoms manifest, usually to the shock of the family.

A new algorithm, however, aims to identify such syndromes much sooner by screening photos for the characteristic facial features associated with particular genetic conditions, such as Down’s syndrome, progeria, and fragile X syndrome.
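One simple way such screening could work is to compare a new face against reference faces with known diagnoses and rank conditions by how many of the closest matches carry each label. The sketch below uses k-nearest-neighbour voting as a stand-in; it is not the published algorithm. It assumes faces have already been reduced to numeric feature vectors (for example, from facial landmarks), and the labels, vectors, and `rank_syndromes` helper are hypothetical.

```python
import math
from collections import Counter
from typing import List, Sequence, Tuple

# (feature vector, diagnosis label); both are hypothetical placeholders.
Reference = Tuple[Sequence[float], str]


def rank_syndromes(query: Sequence[float], references: List[Reference],
                   k: int = 5) -> List[Tuple[str, int]]:
    """Return (label, votes) among the k reference faces closest to the query,
    most votes first. Ties keep the order in which labels were first seen."""
    nearest = sorted(references, key=lambda ref: math.dist(ref[0], query))[:k]
    return Counter(label for _, label in nearest).most_common()


references: List[Reference] = [
    ([0.0, 0.0], "syndrome_a"), ([1.0, 0.0], "syndrome_a"), ([0.0, 1.0], "syndrome_a"),
    ([5.0, 5.0], "syndrome_b"), ([6.0, 5.0], "syndrome_b"),
    ([10.0, 10.0], "unaffected"),
]
print(rank_syndromes([0.5, 0.5], references, k=5))
```

Returning a ranked list rather than a single verdict fits the use case the researchers describe: the output is a shortlist of conditions for a doctor to investigate, not a diagnosis.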

[…]

Nellåker, one of the researchers behind the algorithm, added, “A doctor should in future, anywhere in the world, be able to take a smartphone picture of a patient and run the computer analysis to quickly find out which genetic disorder the person might have.”

Ref: ALGORITHM HUNTS RARE GENETIC DISORDERS FROM FACIAL FEATURES IN PHOTOS – SingularityHub

LEVAN

Unfair Advantages of Emotional Computing

Pepper, SoftBank’s humanoid robot, is intended to babysit your kids and work the registers at retail stores. What’s really remarkable is that Pepper is designed to understand and respond to human emotion.

Heck, understanding human emotion is tough enough for most HUMANS.

A new field of “affective computing” is coming your way, and it will give entrepreneurs and marketers a real unfair advantage. That’s what this note to you is about… It’s really very powerful, and something I’m thinking about a lot.

Recent advances in the field of emotion tracking are about to give businesses an enormous unfair advantage.

Take Beyond Verbal, a start-up in Tel Aviv. It has developed software that can detect 400 different variations of human “moods,” and it is now integrating that software into call centers, where it can help sales assistants understand and react to customers’ emotions in real time.

Better than that, the software itself can also pinpoint and influence how consumers make decisions.

Ref: UNFAIR ADVANTAGES OF EMOTIONAL COMPUTING – SingularityHub