The state plays an important role in shaping the relationship between labor and technology, and can push for the design of systems that benefit ordinary people. It can also have the opposite effect. Indeed, the history of computing in the US context has been tightly linked to government command, control, and automation efforts.

But it does not have to be this way. Consider how the Allende government approached the technology-labor question in the design of Project Cybersyn. Allende made raising employment central both to his economic plan and to his overall strategy for helping Chileans. His government pushed for new forms of worker participation on the shop floor and for the integration of worker knowledge into economic decision-making.

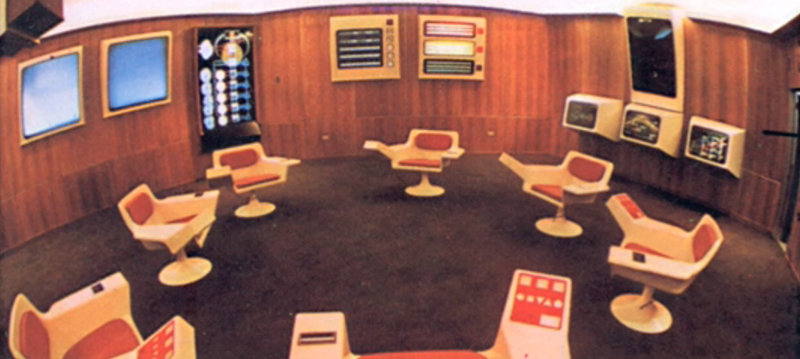

This political environment allowed Stafford Beer, the British cybernetician advising the Chilean government, to view computer technology as a way to empower workers. In 1972, he published a report for the Chilean government that proposed giving Chilean workers, not managers or government technocrats, control of Project Cybersyn. More radically, Beer envisioned a way for Chile's workers to participate in Cybersyn's design.

He recommended that the government allow workers — not engineers — to build the models of the state-controlled factories because they were best qualified to understand operations on the shop floor. Workers would thus help design the system that they would then run and use. Allowing workers to use both their heads and their hands would limit how alienated they felt from their labor.

[…]

But Beer showed an ability to envision how computerization in a factory setting might serve ends other than speed-ups and deskilling. Those were the results of capitalist development that labor scholars such as Harry Braverman observed in the United States, where the government lacked a comparable commitment to actively limiting unemployment or encouraging worker participation.

[…]

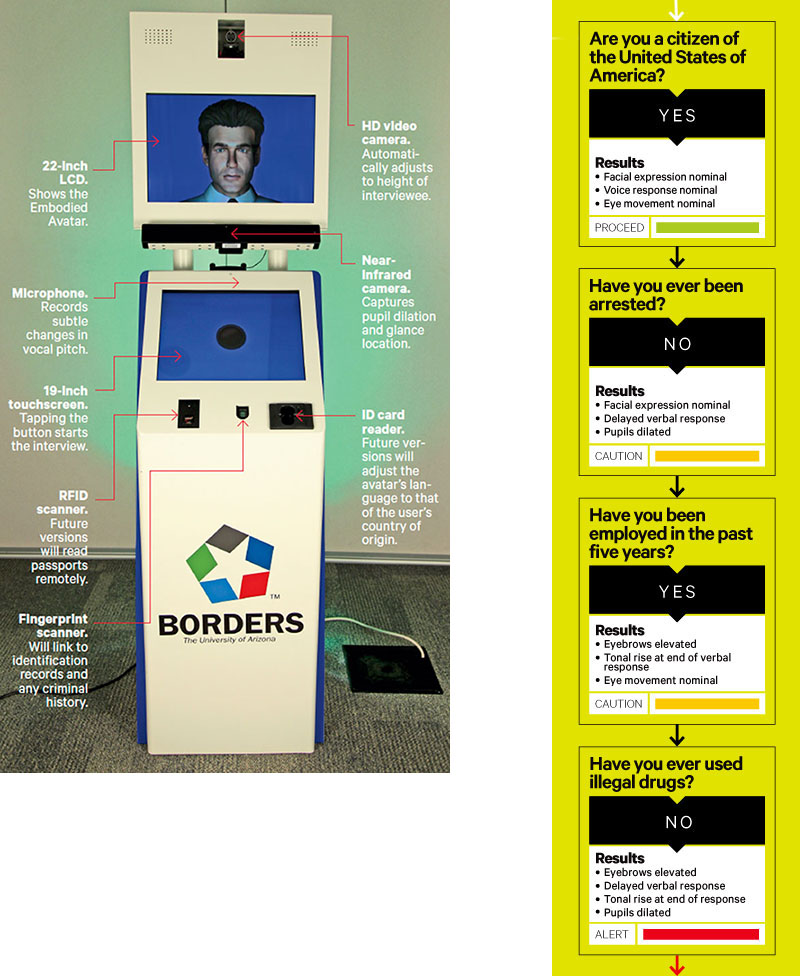

We need to be thinking in terms of systems rather than technological quick fixes. Discussions about smart cities, for example, regularly focus on better network infrastructures and on information and communication technologies such as integrated sensors, mobile phone apps, and online services. Often, the underlying assumption is that such interventions will automatically improve the quality of urban life by making it easier for residents to access government services and by providing city government with data to improve city maintenance.

But this technological determinism doesn't offer a holistic understanding of how such technologies might negatively affect critical aspects of city life. For example, the sociologist Robert Hollands argues that tech-centered smart-city initiatives might attract an influx of technologically literate workers and exacerbate the displacement of other workers. They might also divert city resources toward building computer infrastructures and away from other important areas of city life.

[…]

We must resist the kind of apolitical “innovation determinism” that sees the creation of the next app, online service, or networked device as the best way to move society forward. Instead, we should push ourselves to think creatively of ways to change the structure of our organizations, political processes, and societies for the better and about how new technologies might contribute to such efforts.