Now, a new study by a team of Japanese researchers shows that, in certain situations, children ~~are actually horrible little brats~~ may not be as empathetic towards robots as we’d previously thought, with gangs of unsupervised tykes repeatedly punching, kicking, and shaking a robot in a Japanese mall.[…]

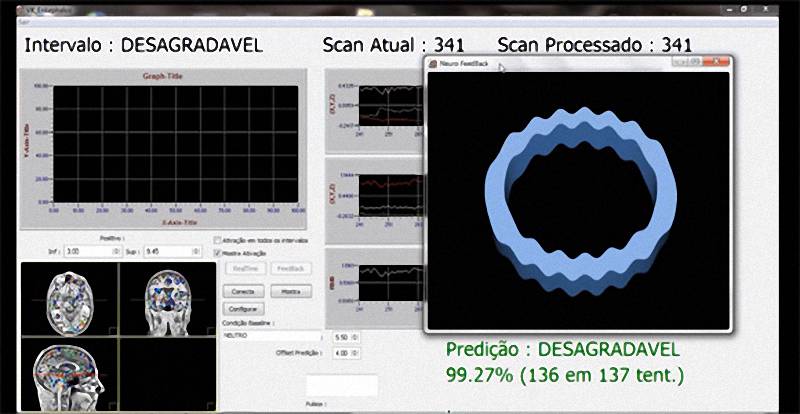

Next, they designed an abuse-evading algorithm to help the robot avoid situations where tiny humans might gang up on it. Literally tiny humans: the robot is programmed to run away from people who are below a certain height and escape in the direction of taller people. When it encounters a human, the system calculates the probability of abuse based on interaction time, pedestrian density, and the presence of people above or below 1.4 meters (4 feet 6 inches) in height. If the robot is statistically in danger, it changes its course towards a more crowded area or a taller person. This ensures that an adult is there to intervene when one of the little brats decides to pound the robot’s head with a bottle (which only happened a couple times).
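To make the decision logic above concrete, here is a minimal Python sketch. Only the three inputs (interaction time, pedestrian density, people above or below 1.4 meters) and the "head for a taller person" rule come from the article. The logistic scoring, the weights, the 0.5 threshold, and every name (`Person`, `abuse_probability`, `escape_target`, `next_action`) are assumptions made up for illustration, not the researchers' actual model.

```python
import math
from dataclasses import dataclass

HEIGHT_THRESHOLD_M = 1.4  # height cutoff quoted in the article


@dataclass
class Person:
    x: float
    y: float
    height_m: float


def abuse_probability(interaction_s, density, people):
    """Toy estimate of abuse risk from the three inputs the article names.

    The weights below are invented for illustration; the study's real
    model and parameters are not given in the article.
    """
    children = sum(1 for p in people if p.height_m < HEIGHT_THRESHOLD_M)
    adults = len(people) - children
    score = (
        -3.0
        + 0.05 * interaction_s  # longer interactions raise the risk
        - 1.0 * density         # crowded areas lower it
        + 1.5 * children        # each short person raises it
        - 2.0 * adults          # each tall person lowers it
    )
    return 1.0 / (1.0 + math.exp(-score))


def escape_target(robot_xy, people):
    """Return the nearest person at or above the cutoff, or None."""
    adults = [p for p in people if p.height_m >= HEIGHT_THRESHOLD_M]
    if not adults:
        return None
    return min(adults, key=lambda p: math.dist(robot_xy, (p.x, p.y)))


def next_action(robot_xy, interaction_s, density, people, threshold=0.5):
    """Keep going if the risk is low; otherwise head for a taller person."""
    if abuse_probability(interaction_s, density, people) < threshold:
        return ("continue", None)
    target = escape_target(robot_xy, people)
    if target is None:
        # No adult in sight: the article's fallback is a more crowded area,
        # which this sketch does not model.
        return ("seek_crowd", None)
    return ("approach", (target.x, target.y))
```

With two children close by and one adult a few meters away, a call such as `next_action((0, 0), 60, 0.1, people)` in this toy model steers the robot toward the adult. A real system would also need the perception side (tracking people and estimating their height), which is out of scope here.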

Ref: Children Beating Up Robot Inspires New Escape Maneuver System – IEEE Spectrum