Yet, some people, often as the result of traumatic experiences or neglect, don’t experience these fundamental social feelings normally. Could a machine teach them these quintessentially human responses? A thought-provoking Brazilian study recently published in PLoS One suggests it could.

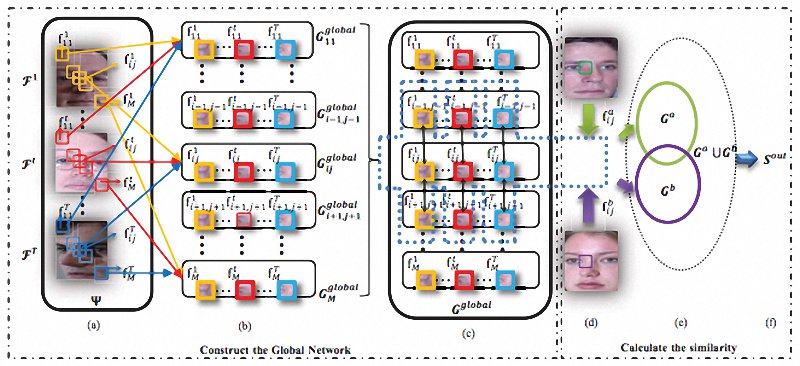

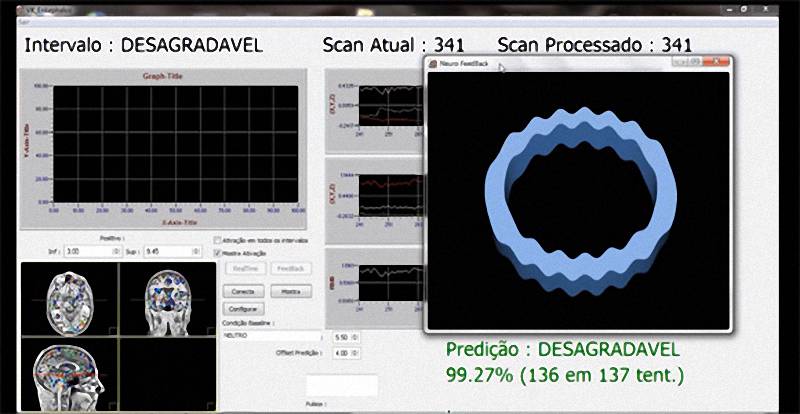

Researchers at the D’Or Institute for Research and Education outside Rio de Janeiro, Brazil, performed functional MRI scans on healthy young adults while asking them to focus on past experiences that epitomized feelings of non-sexual affection or pride of accomplishment. They set up a basic form of artificial intelligence to categorize, in real time, the fMRI readings as affection, pride or neither. They then showed the experimental group a graphic form of biofeedback to tell them whether their brain activity was fully manifesting that feeling; the control group saw meaningless graphics.

The results demonstrated that the machine-learning algorithms were able to detect complex emotions that stem from neurons in various parts of the cortex and sub-cortex, and that the participants were able to hone their feelings based on the feedback, learning to light up all of those brain regions on command.

[…]

Here we must pause to note that the likeness of the experiment’s artificial intelligence system to the “empathy box” in “Blade Runner” and the Philip K. Dick story on which it’s based did not escape the researchers. Yes, the system could potentially be used to subject a person’s inner feelings to interrogation by intrusive government bodies, which is really about as creepy as it gets. It could, to cite that other dystopian science fiction blockbuster, “Minority Report,” identify criminal tendencies and condemn people even before they commit crimes.

Ref: MACHINES TEACH HUMANS HOW TO FEEL USING NEUROFEEDBACK – SingularityHub