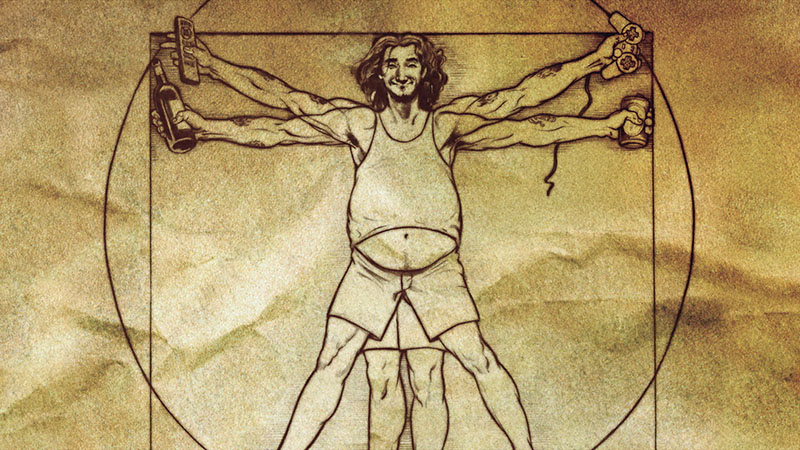

Dr. Gerald Crabtree, a geneticist at Stanford, has published a study that he conducted to try to trace the progression of modern man’s intelligence. As it turns out, however, Dr. Crabtree’s research led him to believe that the collective mind of mankind has been on a more or less downhill trajectory for quite some time.

According to his research, published in two parts starting with last year’s ‘Our fragile intellect. Part I,’ Dr. Crabtree thinks unavoidable changes in our genetic make-up, coupled with modern technological advances, have left humans, well, kind of stupid. He has recently published his follow-up analysis, in which he explains that, among the roughly 5,000 genes he considers the basis for human intelligence, mutations accumulated over the years have left modern man only a fraction as bright as his ancestors.

“New developments in genetics, anthropology and neurobiology predict that a very large number of genes underlie our intellectual and emotional abilities, making these abilities genetically surprisingly fragile,” he writes in part one of his research. “Analysis of human mutation rates and the number of genes required for human intellectual and emotional fitness indicates that we are almost certainly losing these abilities,” he adds in his latest report.

From there, the doctor goes on to explain that general mutations over the last few thousand years have left mankind increasingly unable to cope with certain situations to which our ancestors were perhaps better adapted.

“I would wager that if an average citizen from Athens of 1000 BC were to appear suddenly among us, he or she would be among the brightest and most intellectually alive of our colleagues and companions, with a good memory, a broad range of ideas, and a clear-sighted view of important issues. Furthermore, I would guess that he or she would be among the most emotionally stable of our friends and colleagues. I would also make this wager for the ancient inhabitants of Africa, Asia, India or the Americas, of perhaps 2000–6000 years ago. The basis for my wager comes from new developments in genetics, anthropology, and neurobiology that make a clear prediction that our intellectual and emotional abilities are genetically surprisingly fragile.”

According to the doctor, humans were at their most intelligent when “every individual was exposed to nature’s raw selective mechanisms on a daily basis.” Under those conditions, adaptation, he argued, was much more than a matter of fight or flight. Rather, says the scientist, it was a sink-or-swim situation for generations upon generations.

“We, as a species, are surprisingly intellectually fragile and perhaps reached a peak 2,000 to 6,000 years ago,” he writes. “If selection is only slightly relaxed, one would still conclude that nearly all of us are compromised compared to our ancient ancestors of 3,000 to 6,000 years ago.”