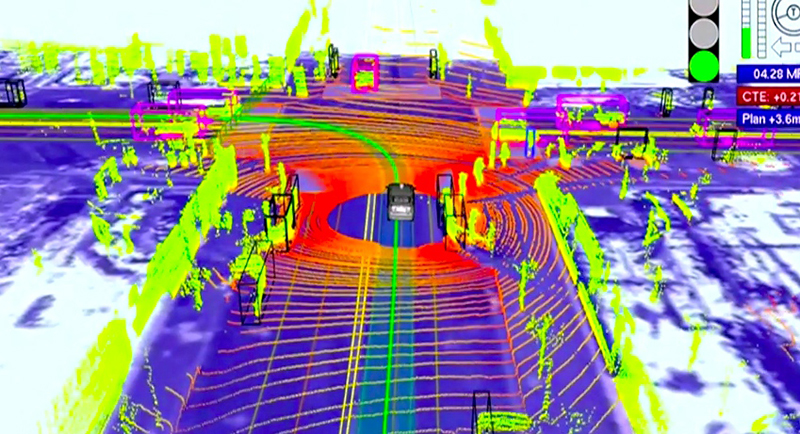

Several computers inside the car’s trunk perform split-second measurements and calculations, processing data pouring in from the sensors. Software assigns a value to each lane of the road based on the car’s speed and the behavior of nearby vehicles. Using a probabilistic technique that helps cancel out inaccuracies in sensor readings, this software decides whether to switch to another lane, to attempt to pass the car ahead, or to get out of the way of a vehicle approaching from behind. Commands are relayed to a separate computer that controls acceleration, braking, and steering. Yet another computer system monitors the behavior of everything involved with autonomous driving for signs of malfunction.
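The article does not say which probabilistic technique BMW's software uses. One widely used option for taming noisy sensor readings is a Kalman filter. The minimal one-dimensional sketch below shows the idea: each new range reading to the car ahead is blended with the running estimate, weighted by how uncertain each one is. The class name, the noise variances, and the sample readings are illustrative assumptions, not values from BMW's system.

```python
"""Minimal 1-D Kalman filter that smooths noisy distance readings.

Illustrative only: the article does not name BMW's technique; a Kalman
filter is one common example. All parameters here are hypothetical.
"""
from dataclasses import dataclass


@dataclass
class Kalman1D:
    estimate: float         # current best guess of the distance (m)
    variance: float         # uncertainty of that guess (m^2)
    process_var: float      # how much the true distance may drift per step (m^2)
    measurement_var: float  # assumed sensor noise (m^2)

    def update(self, measurement: float) -> float:
        # Predict: assume the distance is unchanged, but uncertainty grows.
        self.variance += self.process_var
        # Kalman gain: how much to trust the new reading vs. the estimate.
        gain = self.variance / (self.variance + self.measurement_var)
        # Correct: move the estimate toward the reading by that fraction.
        self.estimate += gain * (measurement - self.estimate)
        # Incorporating a reading reduces the remaining uncertainty.
        self.variance *= 1 - gain
        return self.estimate


def smooth(readings, measurement_var=4.0, process_var=0.01):
    """Filter a list of readings, seeding the estimate with the first one."""
    if not readings:
        return []
    kf = Kalman1D(estimate=readings[0], variance=measurement_var,
                  process_var=process_var, measurement_var=measurement_var)
    return [kf.update(r) for r in readings[1:]]


if __name__ == "__main__":
    raw = [30.0, 32.5, 28.9, 31.2, 29.4, 30.8]  # hypothetical ranges (m)
    print([round(x, 2) for x in smooth(raw)])
```

The filtered values stay closer to the true distance than any single jumpy reading, which is the sense in which such a technique "cancels out" sensor inaccuracies; a production system would track many quantities at once, such as position and velocity in two dimensions.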

[…]

For one thing, many of the sensors and computers found in BMW’s car, and in other prototypes, are too expensive to be deployed widely. And achieving even more complete automation will probably mean using more advanced, more expensive sensors and computers. The spinning laser instrument, or LIDAR, seen on the roof of Google’s cars, for instance, provides the best 3-D image of the surrounding world, accurate down to two centimeters, but sells for around $80,000. Such instruments will also need to be miniaturized and redesigned, adding more cost, since few car designers would slap the existing ones on top of a sleek new model.

Cost will be just one factor, though. While several U.S. states have passed laws permitting autonomous cars to be tested on their roads, the National Highway Traffic Safety Administration has yet to devise regulations for testing and certifying the safety and reliability of autonomous features. Two major international treaties, the Vienna Convention on Road Traffic and the Geneva Convention on Road Traffic, may need to be changed for the cars to be used in Europe and the United States, as both documents state that a driver must be in full control of a vehicle at all times.

Most daunting, however, are the remaining computer science and artificial-intelligence challenges. Automated driving will at first be limited to relatively simple situations, mainly highway driving, because the technology still can’t respond to uncertainties posed by oncoming traffic, rotaries, and pedestrians. Drivers will also almost certainly be expected to take on some sort of supervisory role, staying ready to retake control as soon as the system moves outside its comfort zone.

[…]

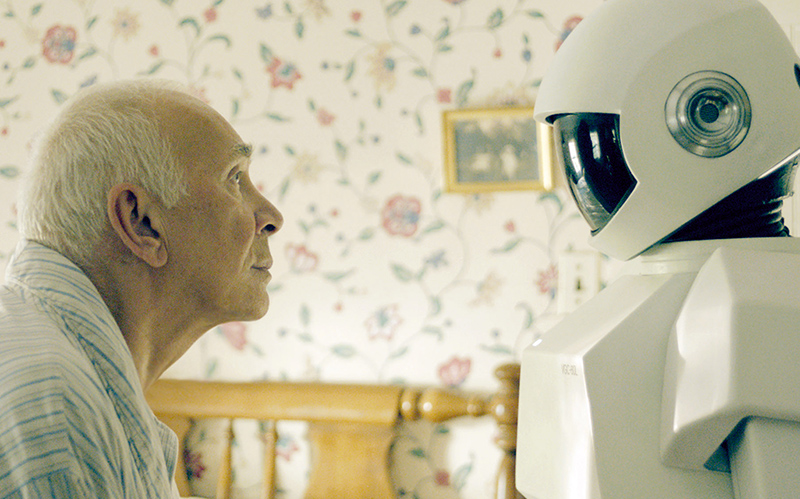

The relationship between human and robot driver could be surprisingly fraught. The problem, as I discovered during my BMW test drive, is that it’s all too easy to lose focus, and difficult to get it back. The difficulty of reëngaging distracted drivers is an issue that Bryan Reimer, a research scientist in MIT’s Age Lab, has well documented (see “Proceed with Caution toward the Self-Driving Car,” May/June 2013). Perhaps the “most inhibiting factors” in the development of driverless cars, he suggests, “will be factors related to the human experience.”

In an effort to address this issue, carmakers are thinking about ways to prevent drivers from becoming too distracted, and ways to bring them back to the driving task as smoothly as possible. This may mean monitoring drivers’ attention and alerting them if they’re becoming too disengaged. “The first generations [of autonomous cars] are going to require a driver to intervene at certain points,” Clifford Nass, codirector of Stanford University’s Center for Automotive Research, told me. “It turns out that may be the most dangerous moment for autonomous vehicles. We may have this terrible irony that when the car is driving autonomously it is much safer, but because of the inability of humans to get back in the loop it may ultimately be less safe.”

An important challenge with a system that drives all by itself, but only some of the time, is that it must be able to predict when it may be about to fail, to give the driver enough time to take over. This ability is limited by the range of a car’s sensors and by the inherent difficulty of predicting the outcome of a complex situation. “Maybe the driver is completely distracted,” Werner Huber said. “He takes five, six, seven seconds to come back to the driving task—that means the car has to know [in advance] when its limitation is reached. The challenge is very big.”
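Huber's five-to-seven-second figure translates directly into distance. The back-of-the-envelope sketch below assumes a constant highway speed of 130 km/h; that speed, and the hypothetical `detection_range_m` parameter standing in for how far the car's sensors can see, are illustrative assumptions rather than figures from the article.

```python
"""How far does a car travel while a distracted driver re-engages?

Illustrative arithmetic only: the speed and detection range are
assumptions, not figures from the article. Braking distance is ignored.
"""


def takeover_distance(speed_kmh: float, takeover_s: float) -> float:
    """Metres covered at constant speed during the takeover interval."""
    return speed_kmh / 3.6 * takeover_s  # km/h -> m/s, then times seconds


def can_warn_in_time(speed_kmh: float, takeover_s: float,
                     detection_range_m: float) -> bool:
    """True if a problem spotted at the edge of sensor range leaves the
    driver enough time to take over before the car reaches it."""
    return takeover_distance(speed_kmh, takeover_s) <= detection_range_m


if __name__ == "__main__":
    for seconds in (5, 6, 7):  # Huber's five, six, seven seconds
        print(f"{seconds} s at 130 km/h: {takeover_distance(130, seconds):.1f} m")
```

At that speed a car covers roughly 181, 217, and 253 meters in five, six, and seven seconds, so the system would need to foresee its own limits about a quarter of a kilometer ahead, before the driver even begins to steer or brake.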

Before traveling to Germany, I visited John Leonard, an MIT professor who works on robot navigation, to find out more about the limits of vehicle automation. Leonard led one of the teams involved in the DARPA Urban Challenge, an event in 2007 that saw autonomous vehicles race across mocked-up city streets, complete with stop-sign intersections and moving traffic. The challenge inspired new research and new interest in autonomous driving, but Leonard is restrained in his enthusiasm for the commercial trajectory that autonomous driving has taken since then. “Some of these fundamental questions, about representing the world and being able to predict what might happen—we might still be decades behind humans with our machine technology,” he told me. “There are major, unsolved, difficult issues here. We have to be careful that we don’t overhype how well it works.”

Leonard suggested that much of the technology that has helped autonomous cars deal with complex urban environments in research projects—some of which is used in Google’s cars today—may never be cheap or compact enough to be employed in commercially available vehicles. This includes not just the LIDAR but also an inertial navigation system, which provides precise positioning information by monitoring the vehicle’s own movement and combining the resulting data with differential GPS and a highly accurate digital map. What’s more, poor weather can significantly degrade the reliability of sensors, Leonard said, and it may not always be feasible to rely heavily on a digital map, as so many prototype systems do. “If the system relies on a very accurate prior map, then it has to be robust to the situation of that map being wrong, and the work of keeping those maps up to date shouldn’t be underestimated,” he said.
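The pairing Leonard describes, an inertial system tracking the vehicle's own motion and corrected by differential GPS, can be illustrated with a deliberately simplified one-dimensional sketch. It assumes position along the road is dead-reckoned from the car's measured speed (a real inertial navigation system integrates accelerometer and gyroscope readings in three dimensions) and periodically corrected by a GPS fix using a Kalman-style weighted update; the digital-map step is omitted. The class, function, and noise values are hypothetical.

```python
"""1-D sketch of dead reckoning corrected by GPS fixes.

Hypothetical and simplified: real systems fuse 3-D inertial data,
differential GPS, and a digital map. Noise values are assumptions.
"""
from dataclasses import dataclass


@dataclass
class PositionFilter:
    position: float    # estimated distance along the road (m)
    variance: float    # uncertainty of the estimate (m^2)
    motion_var: float  # error added per step by dead reckoning (m^2)
    gps_var: float     # assumed differential-GPS noise (m^2)

    def predict(self, speed: float, dt: float) -> None:
        # Dead reckoning: advance using the vehicle's own measured motion.
        self.position += speed * dt
        self.variance += self.motion_var

    def correct(self, gps_position: float) -> None:
        # Blend in an absolute fix, weighted by relative uncertainty.
        gain = self.variance / (self.variance + self.gps_var)
        self.position += gain * (gps_position - self.position)
        self.variance *= 1 - gain


def track(speeds, gps_fixes, dt=0.1, motion_var=0.05, gps_var=0.25):
    """Return position estimates; a fix of None means no GPS that step."""
    f = PositionFilter(0.0, 0.0, motion_var, gps_var)
    out = []
    for speed, fix in zip(speeds, gps_fixes):
        f.predict(speed, dt)
        if fix is not None:  # fixes can be missing, e.g. in a tunnel
            f.correct(fix)
        out.append(f.position)
    return out


if __name__ == "__main__":
    # True speed 20 m/s, but the (hypothetical) sensor reads 2.5% high.
    speeds = [20.5] * 100
    fixes = [2.0 * (i + 1) if (i + 1) % 10 == 0 else None for i in range(100)]
    print("dead reckoning only:", round(track(speeds, [None] * 100)[-1], 2))
    print("with GPS fixes:     ", round(track(speeds, fixes)[-1], 2))
```

In this toy run the true position after 10 seconds is 200 meters. Dead reckoning alone drifts about 5 meters, while a GPS fix once a second keeps the error well under a meter. When fixes drop out, the estimate falls back on dead reckoning and its uncertainty grows, which is one reason such systems lean on additional references like a prior map.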