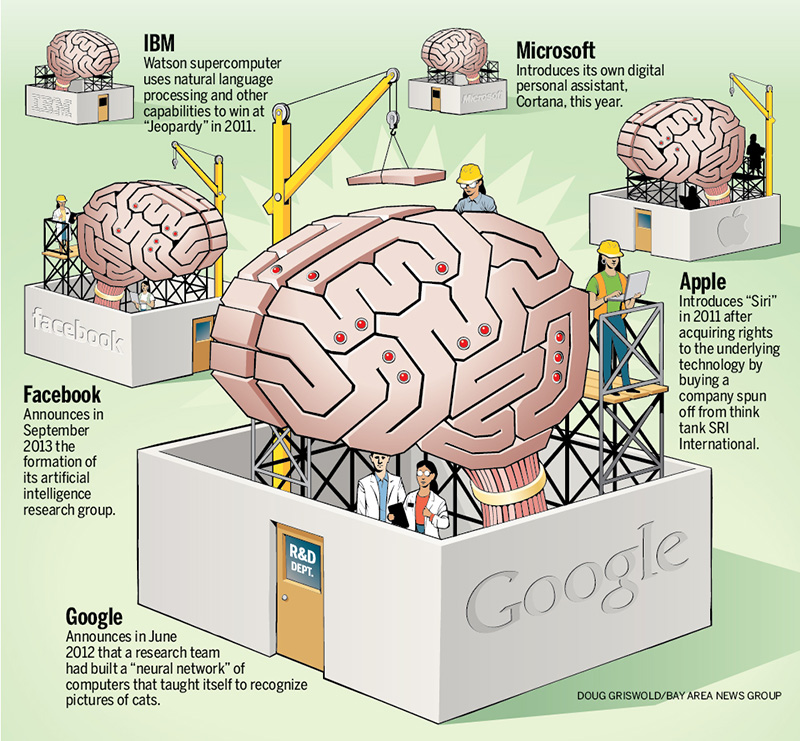

Musk and Hawking fret over an AI apocalypse, but there are more immediate threats. In the past five years, advances in artificial intelligence—in particular, within a branch of AI algorithms called deep neural networks—are putting AI-driven products front-and-center in our lives. Google, Facebook, Microsoft and Baidu, to name a few, are hiring artificial intelligence researchers at an unprecedented rate, and putting hundreds of millions of dollars into the race for better algorithms and smarter computers.

AI problems that seemed nearly unassailable just a few years ago are now being solved. Deep learning has boosted Android’s speech recognition, and given Skype Star Trek-like instant translation capabilities. Google is building self-driving cars, and computer systems that can teach themselves to identify cat videos. Robot dogs can now walk very much like their living counterparts.

“Things like computer vision are starting to work; speech recognition is starting to work. There’s quite a bit of acceleration in the development of AI systems,” says Bart Selman, a Cornell professor and AI ethicist who was at the event with Musk. “And that’s making it more urgent to look at this issue.”

Given this rapid clip, Musk and others are calling on those building these products to carefully consider the ethical implications. At the Puerto Rico conference, delegates signed an open letter pledging to conduct AI research for good, while “avoiding potential pitfalls.” Musk signed the letter too. “Here are all these leading AI researchers saying that AI safety is important,” Musk said yesterday. “I agree with them.”

[…]

Deciding the dos and don’ts of scientific research is the kind of baseline ethical work that molecular biologists did during the 1975 Asilomar Conference on Recombinant DNA, where they agreed on safety standards designed to prevent manmade genetically modified organisms from posing a threat to the public. The Asilomar conference had a much more concrete result than the Puerto Rico AI confab.

Ref: AI Has Arrived, and That Really Worries the World’s Brightest Minds – Wired