- Masterclass on ethically autonomous algorithms or artificial moral agents (AMAs)

- More specifically: issues raised when we attempt to design ethics into future autonomous products

- There are different ways to explore these impacts; the one used for this research is speculative design.

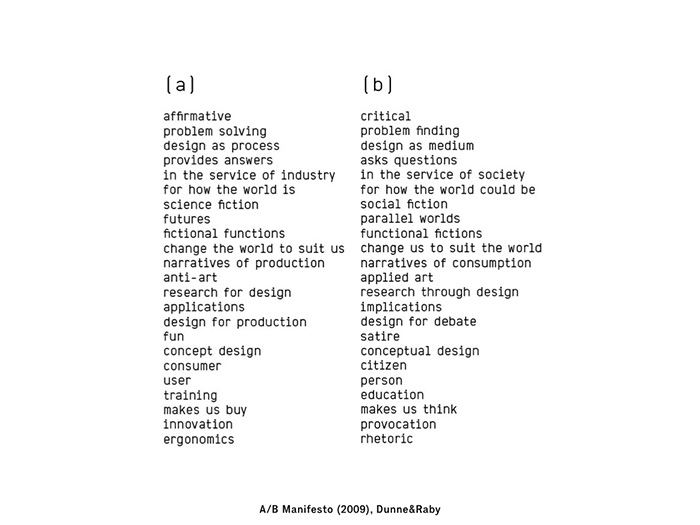

- A/B Manifesto by Dunne & Raby. The (A) column is how we usually understand design and the (B) column is what speculative design is about.

- Roughly, we usually understand design as something that can solve a problem or provide an answer. Speculative design is about finding problems and asking questions.

NB: Speculative design is of course not the only design methodology falling in this (B) column.

[Further reading on speculative design: Speculative Design: Crafting the Speculation by James Auger; Speculative Everything by Dunne & Raby]

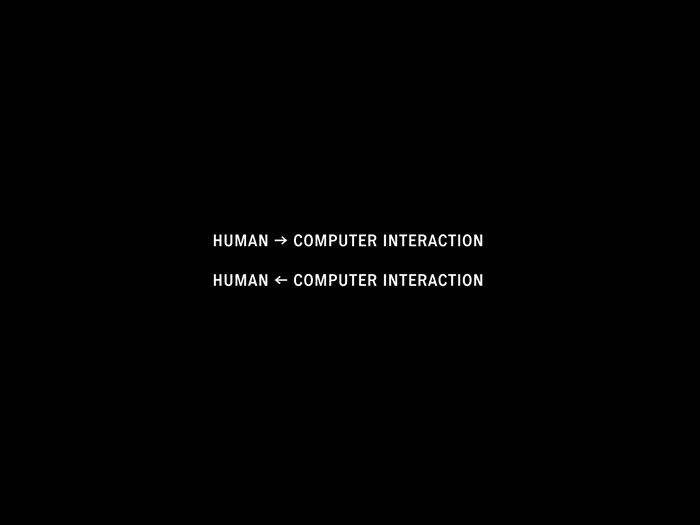

- The field of Human-Computer Interaction (HCI) is often about how to design a technology so that it fits users' needs and desires. So: Human -> Computer Interaction.

- What about the other way round: how is technology redesigning/reshaping our lives? Speculative design is a way to explore that point.

[End description of speculative design - Start to speak about main topic]

- There is a long history of objects having moral impacts on our lives.

- For instance, ultrasound scans may help us to answer moral questions regarding the lives of unborn children.

- But the final decision/deliberation rests on human agreement, even if the technology played a role and gave its insight into the decision.

- If we take the ultrasound scan again, the decision whether or not to keep an unborn child is made by the parents/doctor...and the ultrasound scan gave its insight into that ethical decision by providing an image of the child, statistical data and so on.

- Examples of AMAs in our world:

- Autonomous vehicles will have to face a very complex and human world where a lot of unexpected situations may happen. In order to deal with this unexpected factor, autonomous vehicles will need some form of ethical decision-making module.

- Lethal battlefield robots: here it is straightforward. The aim of the robot is to engage a target and autonomously decide whether or not to kill that target.

- At some point, I believe that more mundane objects will also need some kind of ethical decision-making module because they know too much about their surroundings (e.g. the Internet of Things). Thus they can't take "neutral" decisions anymore.

- A bit of history: it pretty much started in 1666, when Leibniz published a work called "De arte combinatoria". He was looking to create a general method in which all truths of reason could be reduced to a form of calculation.

- For instance, if such a method existed, philosophical questions could be solved via a calculus rather than by way of impassioned debates and discussions.

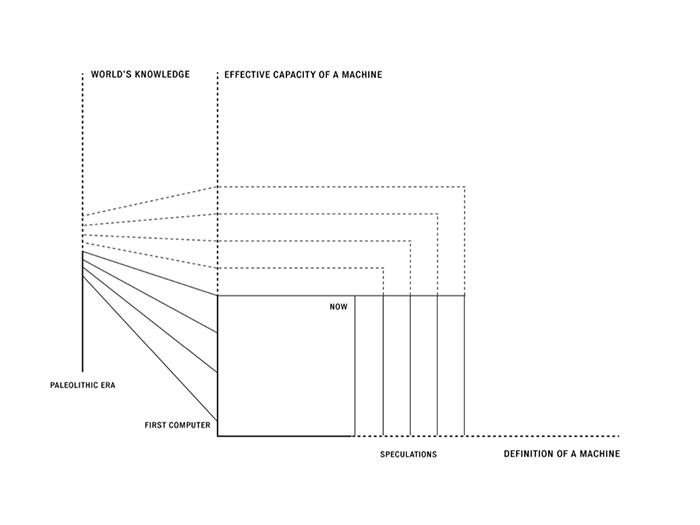

- This became very important by the 1930s - when the first computers were arriving - because it raised the question: what is actually computable? What is calculable? Is Leibniz's idea, that philosophical questions could be solved via a calculus, realistic?

- Here Turing's work is relevant to that question, first when he tried to define computability (Church-Turing thesis).

- And more importantly in his text Computing Machinery and Intelligence, where Turing asks "Can machines think?". A few lines after asking this question, Turing sets it aside as one that cannot be answered directly, because both 'machine' and 'think' are too hard to define.

- Rather, his text asks 'What makes us human?'. If a machine can think then what are we as humans?

- It's here that ethics starts to be interesting, because this is when technology redefines important questions such as what makes us human. More importantly, it is when technology makes an assumption about what we are as humans.

- We often refer to our moral abilities as something very human. That is, we have a moral compass that helps us navigate through various life situations (ability to judge what would be good or bad to do).

- If machines are suddenly able to take ethical decisions, if what is good and what is bad can be computed, it deprives us of a part of what makes us human.

- But are there any good arguments for saying that ethics is computable? If yes, it would mean that ethical theories are built around mechanistic or calculable models. Is that the case?

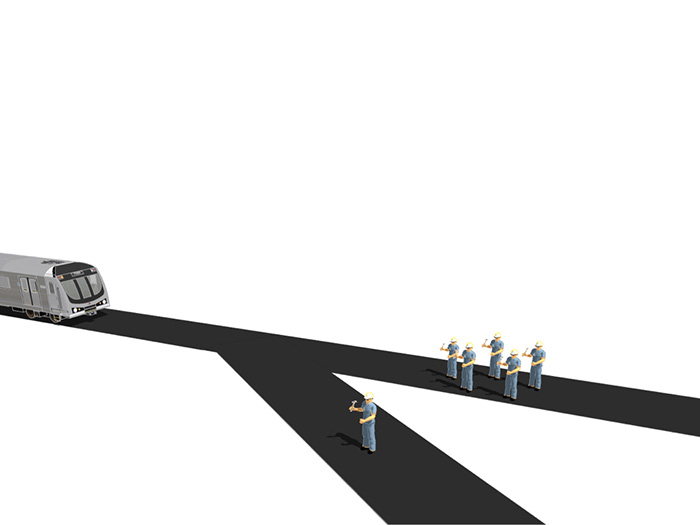

- Trolley dilemma: a trolley is about to kill 5 workers. You can pull a lever in order to divert the trolley and save the 5 workers. However, it will then kill 1 worker (the one working on the other track).

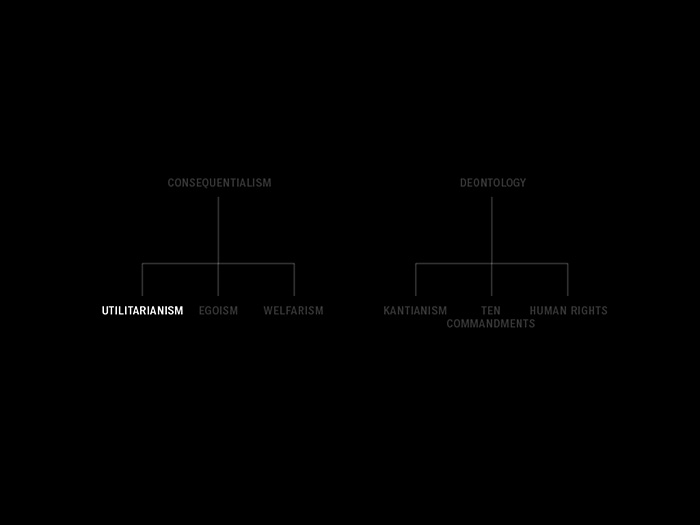

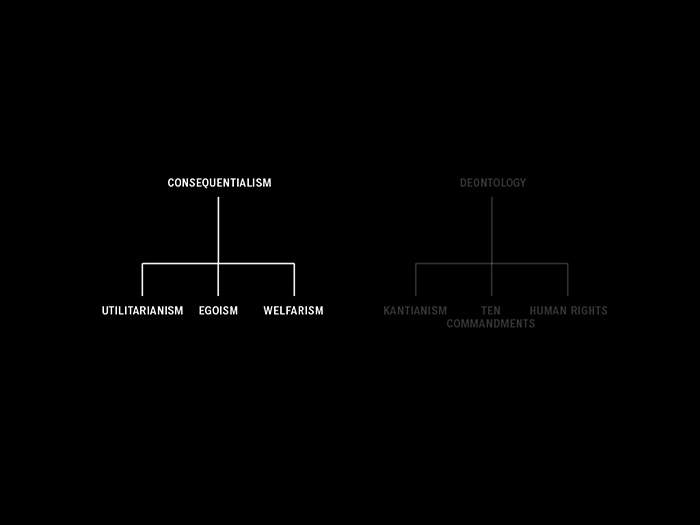

- Usually a high percentage of people answer that they would divert the trolley, because it is better to save 5 people and sacrifice 1 rather than the other way round. 5...1...this is exactly about calculating various outcomes!

- This ethical way of thinking is known as utilitarianism - that is "the greatest good for the greatest number of people" (Jeremy Bentham).

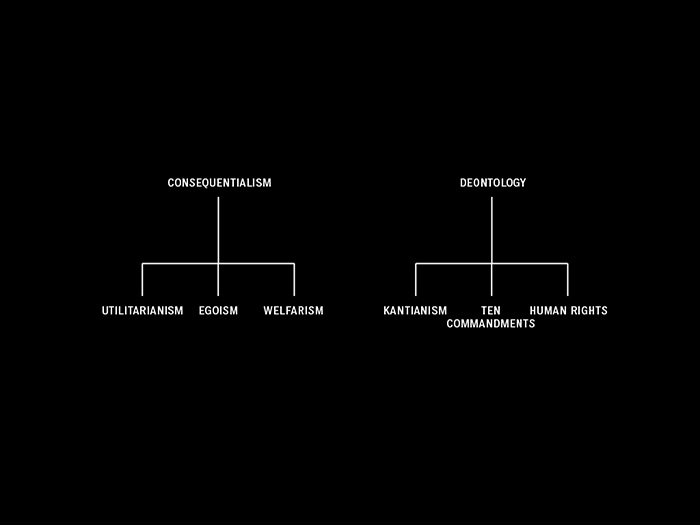

- All forms of ethical theory deriving from consequentialism - that is, judging whether an action is morally right by looking at its consequences - display this calculable element, as they all state more or less the same thing: egoism is the greatest good for the self; altruism the greatest good for everybody except myself; and so on.
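
The calculable element described above can be sketched in a few lines of Python. This is only a toy model under stated assumptions: the `Outcome` class, the `harm` and `choose` functions, and the two weights are hypothetical names invented for this illustration, and reducing a dilemma to a count of deaths is itself a strong simplification.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Outcome:
    """Hypothetical description of what an action leads to."""
    action: str
    deaths_others: int   # people other than the decision-maker who die
    decider_dies: bool   # whether the decision-maker dies


def harm(outcome, weight_self, weight_others):
    """Weighted harm of an outcome; lower counts as 'better' in this toy model."""
    return weight_self * int(outcome.decider_dies) + weight_others * outcome.deaths_others


def choose(outcomes, weight_self, weight_others):
    """Return the action whose outcome has the smallest weighted harm."""
    return min(outcomes, key=lambda o: harm(o, weight_self, weight_others)).action


# The trolley dilemma as two possible outcomes (assumed encoding).
trolley = [
    Outcome("do nothing", deaths_others=5, decider_dies=False),
    Outcome("pull lever", deaths_others=1, decider_dies=False),
]

# Utilitarian weighting: every life counts the same.
print(choose(trolley, weight_self=1.0, weight_others=1.0))  # pull lever
```

In this sketch the different consequentialist theories only change the weights: an egoist would set `weight_others` to 0, an altruist would set `weight_self` to 0. The procedure itself stays the same calculation.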

- The main counterpart of consequentialism, deontological ethics - that is, judging whether an action is morally right by looking at the action in itself - is built around a mechanistic process.

- Most deontological ethics are based around rules that have to be respected (e.g. the Ten Commandments in the Judeo-Christian tradition).

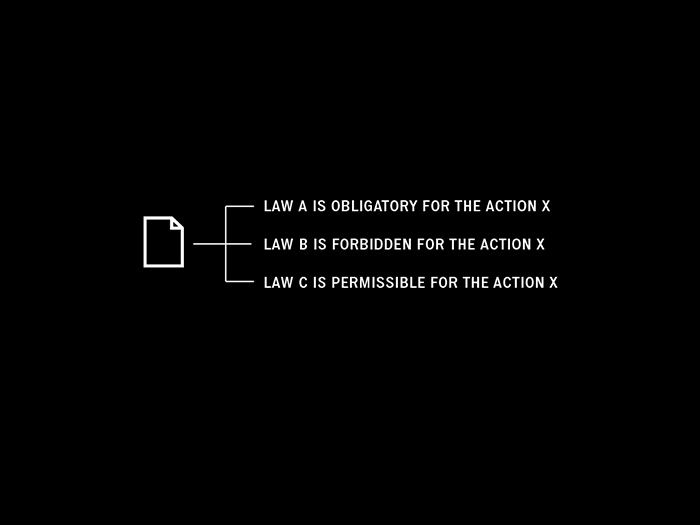

- Roughly, the system would make associations between a case and rules. If the case fits all the rules, then the action is considered morally right.

- A part of the AMA in lethal battlefield robots is actually built around deontological ethics, as the robot has to follow the Rules of War (e.g. the Geneva Conventions; ...).
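
The rule-matching process described above (a case is compared against a set of rules, and the action is accepted only if it fits all of them) could look roughly like the sketch below. Everything here is an assumption made for illustration: the rule names, the `Case` dictionary keys, and the functions `violated_rules` and `is_permitted` are hypothetical placeholders, not the rules or the design of any real system.

```python
from typing import Callable, Dict, List, Tuple

Case = Dict[str, bool]
Rule = Tuple[str, Callable[[Case], bool]]

# Hypothetical rules, invented for this sketch. Each rule is a name plus
# a check that returns True when the case respects the rule.
RULES: List[Rule] = [
    ("do not lie", lambda case: not case["involves_lying"]),
    ("do not steal", lambda case: not case["involves_stealing"]),
]


def violated_rules(case: Case, rules: List[Rule] = RULES) -> List[str]:
    """Return the names of all rules that the case breaks."""
    return [name for name, check in rules if not check(case)]


def is_permitted(case: Case, rules: List[Rule] = RULES) -> bool:
    """An action is considered morally right only if no rule is broken."""
    return not violated_rules(case, rules)


honest_act = {"involves_lying": False, "involves_stealing": False}
white_lie = {"involves_lying": True, "involves_stealing": False}

print(is_permitted(honest_act))   # True
print(violated_rules(white_lie))  # ['do not lie']
```

Note that the sketch never looks at consequences: a white lie is rejected however harmless its outcome, which is the mechanistic character the notes point at.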

- The fact that ethics is based around mechanistic or calculable models is a strong argument for people who believe that machines are better ethical agents than humans.

- Machines will always stick to the rules, no matter what. But we humans have emotions, which may make us drift away from these rules and eventually lead us to a poor ethical decision, roughly because we have followed our emotions rather than the rules.

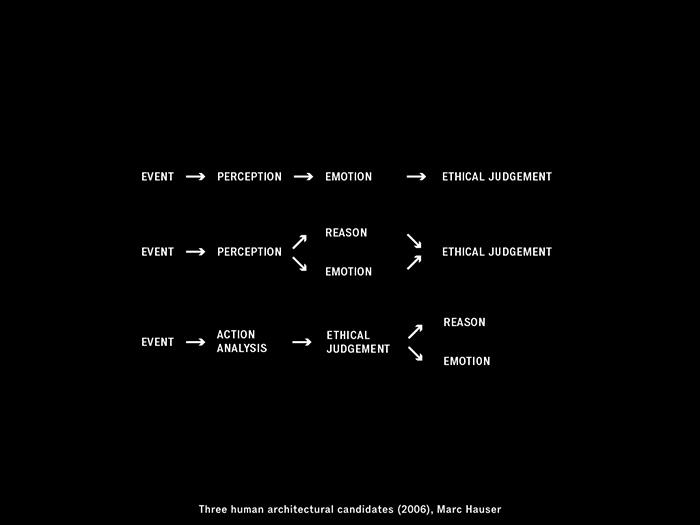

- However, the view that a good ethical decision arises from rules rather than emotion is only one candidate amongst others. [on the image above, the last schema]

- Others say that a good ethical choice arises only from emotions...[first schema] or that it is both reason and emotion that constitute a proper ethical decision [second schema].

- It is there that we start going into the problematic area. Generally, this happens when we try to extend what a machine is in ways that match neither our knowledge of the world nor what a machine can actually do.

- In other words, if we do not really know what ethics is and how it works, how can we design systems aimed at taking ethical decisions?

- We make an assumption that a proper ethical decision is made by following rules only. But is a world where every choice is made by following rules a very desirable one?

- We could argue that our world or societies are built around rules that have to be respected. Yes and no. If I break a rule, I will first go to a court where humans will discuss, deliberate and finally take a decision. Actually, these rules act more as guidelines than as fixed and rigid regulations.

- We are going to look at three specific issues (amongst many).

- Let's imagine that we are in 2025 and that all vehicles on our roads are fully autonomous. If we think about it, what kind of issues/problems could arise?

- The first issue: ethics is very subjective and depends upon each individual's beliefs, culture, religion and so on.

- So how do we design something as subjective as ethics into manufactured products/goods?

- How can we find a solution to a problem that has no objective answer?

[Video above is from the project Ethical Autonomous Vehicles. Autonomous cars driving in a simulated environment are confronted with various ethical dilemmas. In order to solve these, three distinct algorithms have been created.]

- 5-6 min to answer this question with your group.

[Answers in the Workshop Results section]

- The second issue:

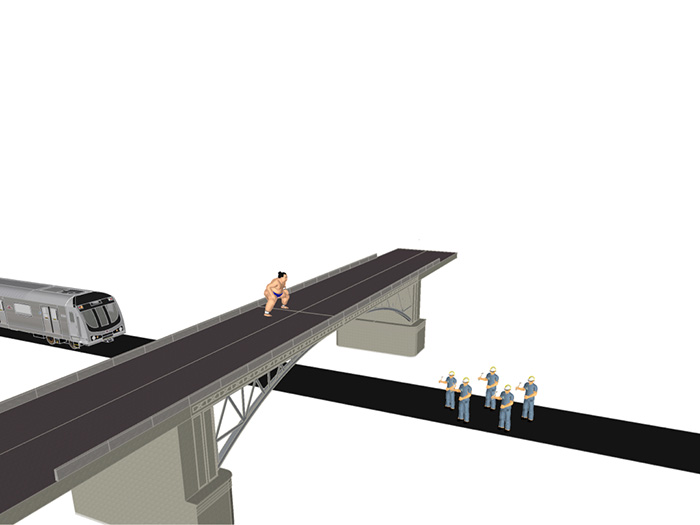

- A modified version of the Trolley Dilemma. Again a trolley is running towards 5 workers and will kill them. You are standing on a bridge, and you can push a very heavy person to make him fall from the bridge. He will hit the trolley and make it stop...but the heavy person will die.

- Compared to the previous trolley dilemma, people are less keen to actively push the person.

- The only thing that differentiates the two dilemmas is the degree of distance from which the act is committed (#1 we only pull a lever, #2 we actively push a guy with our hands to kill him in order to save the 5 workers).

- If the ethical task is handled by a machine, it is very convenient for us, isn't it? We do not need to take such horrible ethical decisions anymore; a machine can do that on our behalf. Our ethical responsibilities are delegated to the machine.

- 5-6 min to answer this question with your group.

- [Answers in the Workshop Results section]

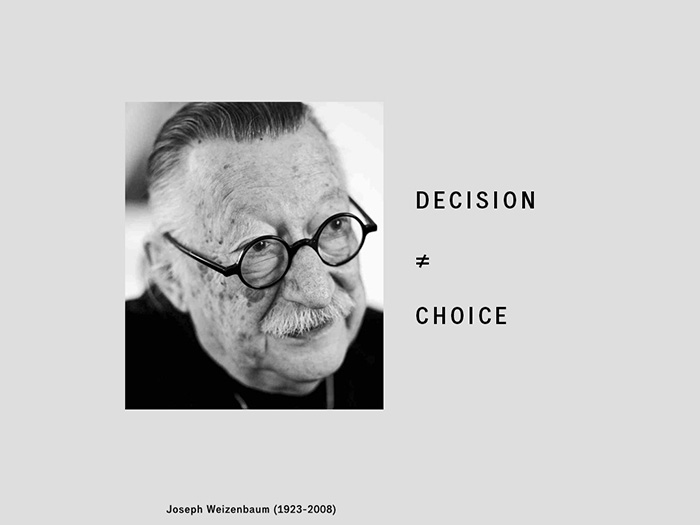

- The third issue: the difference between choices and decisions. As stated by Joseph Weizenbaum, decisions can be computed, while choices are something human as they require wisdom, compassion, emotion, and so on.

- If a machine is taking an ethical decision, this decision is computed - there are values attached to it that say why this action is taken. It is about putting specific rules on what is good and what is bad to do in a given situation.

- If a lethal battlefield robot decides to kill a target it has engaged, it is because there are specific rules saying that, according to inputs generated by the target, the target's life is not worth living...which is a pretty dramatic statement in a world where we keep bragging about equality and human rights.

- 5-6 min to answer this question with your group.

- [Answers in the Workshop Results section]

- Why does it matter to speak about such a topic? [or why I think it matters]

- A techno-solutionist world: the belief that technology can solve and fix all of our problems.

- Somehow a new form of religion: we place our hopes in technology. Is a world where we delegate important tasks such as ethical decision-making a better world? What does "better" even mean? Better for whom? Me? You?

- As future consumers and users of these technologies, we need to be fully aware of their social implications. Using a speculative design approach (or any kind of methodology going towards the same aim) is a way to somehow prototype the future and to have a more considerate approach to these technological developments.
