Category Archives: T – war

Killer Robots: More talks in 2015?

Government delegates attending next week’s annual meeting of the Convention on Certain Conventional Weapons (CCW) at the United Nations in Geneva will decide whether to continue multilateral talks in 2015 on questions relating to “lethal autonomous weapons systems.”

Ambassador Simon-Michel chaired the 2014 experts meeting on lethal autonomous weapons systems and has identified several areas for further study or deliberation in his chair’s report, including:

- The notion of meaningful human control of autonomous weapons systems;

- The key ethical question of delegating the right to decide on life and death to a machine;

- The “various” views on whether autonomous weapons would be able to comply with rules of international law, and the “different” views on the adequacy of existing law;

- Weapons reviews, including Article 36 of Additional Protocol I (1977) to the 1949 Geneva Conventions;

- The accountability gap, including the issue of responsibility at the State level or at an individual level;

- Whether the weapons could change the threshold for the use of force.

Ref: Decision Time More Talks in 2015? – Campaign to Stop Killer Robots

Killer Robots

One such system is the Skunk, a drone designed for crowd control. It can douse demonstrators with teargas.

“There could be a dignity issue here; being herded by drones would be like herding cattle,” he said.

But at least drones have a human at the controls.

“[Otherwise] you are giving [the power of life and death] to a machine,” said Christof Heyns, the UN Special Rapporteur on extrajudicial, summary or arbitrary executions. “Normally, there is a human being to hold accountable.

“If it’s a robot, you can put it in jail until its batteries run flat but that’s not much of a punishment.”

Heyns said the advent of the new generation of weapons made it necessary for laws to be introduced that would prohibit the use of systems that could be operated without a significant level of human control.

“Technology is a tool and it should remain a tool, but it is a dangerous tool and should be held under scrutiny. We need to try to define the elements of needful human control,” he said.

Several organisations have voiced concerns about autonomous weapons. The Campaign to Stop Killer Robots wants a ban on fully autonomous weapons.

Ref: Stop Killer Robots While we Can – Time Live

Now The Military Is Going To Build Robots That Have Morals

The Office of Naval Research will award $7.5 million in grant money over five years to university researchers from Tufts, Rensselaer Polytechnic Institute, Brown, Yale and Georgetown to explore how to build a sense of right and wrong and moral consequence into autonomous robotic systems.

“Even though today’s unmanned systems are ‘dumb’ in comparison to a human counterpart, strides are being made quickly to incorporate more automation at a faster pace than we’ve seen before,” Paul Bello, director of the cognitive science program at the Office of Naval Research, told Defense One. “For example, Google’s self-driving cars are legal and in use in several states at this point. As researchers, we are playing catch-up trying to figure out the ethical and legal implications. We do not want to be caught similarly flat-footed in any kind of military domain where lives are at stake.”

“Even if such systems aren’t armed, they may still be forced to make moral decisions,” Bello said. For instance, in a disaster scenario, a robot may be forced to make a choice about whom to evacuate or treat first, a situation where a bot might use some sense of ethical or moral reasoning. “While the kinds of systems we envision have much broader use in first-response, search-and-rescue and in the medical domain, we can’t take the idea of in-theater robots completely off the table,” Bello said.

Some members of the artificial intelligence, or AI, research and machine ethics communities were quick to applaud the grant. “With drones, missile defenses, autonomous vehicles, etc., the military is rapidly creating systems that will need to make moral decisions,” AI researcher Steven Omohundro told Defense One. “Human lives and property rest on the outcomes of these decisions and so it is critical that they be made carefully and with full knowledge of the capabilities and limitations of the systems involved. The military has always had to define ‘the rules of war’ and this technology is likely to increase the stakes for that.”

Ref: Now The Military Is Going To Build Robots That Have Morals – DefenseOne

Role of Killer Robots

According to Heyns’s 2013 report, South Korea operates “surveillance and security guard robots” in the demilitarized zone that buffers it from North Korea. Although there is an automatic mode available on the Samsung machines, soldiers control them remotely.

The U.S. and Germany possess robots that automatically target and destroy incoming mortar fire. They can also likely locate the source of the mortar fire, according to Noel Sharkey, a University of Sheffield roboticist who is active in the “Stop Killer Robots” campaign.

And of course there are drones. While many get their orders directly from a human operator, unmanned aircraft operated by Israel, the U.K. and the U.S. are capable of tracking and firing on aircraft and missiles. On some of its Navy cruisers, the U.S. also operates Phalanx, a stationary system that can track and engage anti-ship missiles and aircraft.

The Army is testing a gun-mounted ground vehicle, MAARS, that can fire on targets autonomously. One tiny drone, the Raven, is primarily a surveillance vehicle, but among its capabilities is “target acquisition.”

No one knows for sure what other technologies may be in development.

“Transparency when it comes to any kind of weapons system is generally very low, so it’s hard to know what governments really possess,” Michael Spies, a political affairs officer in the U.N.’s Office for Disarmament Affairs, told Singularity Hub.

At least publicly, the world’s military powers seem now to agree that robots should not be permitted to kill autonomously. That is among the criteria laid out in a November 2012 U.S. military directive that guides the development of autonomous weapons. The European Parliament recently established a non-binding ban for member states on using or developing robots that can kill without human participation.

Yet even robots not specifically designed to make kill decisions could do so if they malfunctioned, or if their user experience made it easier for operators to accept automated targeting than to reject it.

“The technology’s not fit for purpose as it stands, but as a computer scientist there are other things that bother me. I mean, how reliable is a computer system?” Sharkey, of Stop Killer Robots, said.

Sharkey noted that warrior robots would do battle with other warrior robots equipped with algorithms designed by an enemy army.

“If you have two competing algorithms and you don’t know the contents of the other person’s algorithm, you don’t know the outcome. Anything could happen,” he said.

For instance, when two sellers on Amazon recently competed for business without realizing it, the interaction of their pricing algorithms drove prices into the millions of dollars. Competing robot armies could destroy cities as their algorithms escalated exponentially, Sharkey said.
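The runaway-pricing example can be illustrated with a minimal sketch, assuming two hypothetical repricing rules that react only to each other: seller A always prices slightly below seller B, and seller B always prices well above seller A. The function names and the multipliers (`undercut=0.998`, `markup=1.27`) are illustrative assumptions, not the sellers' actual settings. Because the product of the two multipliers exceeds 1 and neither rule has a ceiling, prices grow geometrically.

```python
def simulate_repricing(start_price, undercut=0.998, markup=1.27, rounds=50):
    """Simulate two sellers whose (hypothetical) repricing rules react only to each other.

    Seller A prices just below B (undercut < 1); seller B prices above A
    (markup > 1). With no ceiling, both prices grow by a factor of
    undercut * markup from one round to the next.
    """
    price_a = price_b = start_price
    history = []
    for _ in range(rounds):
        price_a = undercut * price_b  # A undercuts B's current price
        price_b = markup * price_a    # B re-prices relative to A's new price
        history.append((price_a, price_b))
    return history


def first_round_above(history, threshold):
    """Return the 1-based round at which seller B's price first exceeds threshold."""
    for round_number, (_, price_b) in enumerate(history, start=1):
        if price_b > threshold:
            return round_number
    return None


history = simulate_repricing(35.0)
print(first_round_above(history, 1_000_000))  # prints 44
```

With a combined growth factor of about 1.267 per round, a $35 listing passes $1 million after 44 rounds. A single sanity cap in either rule would stop the spiral, which is the kind of safeguard that is hard to guarantee when you cannot see the other side's algorithm.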

An even likelier scenario is that human enemies would exploit weaknesses in the robots’ algorithms to produce harmful results. Suppose, for instance, that a machine designed to destroy incoming mortar fire, such as the U.S.’s C-RAM or Germany’s MANTIS, is also tasked with destroying the launcher. A terrorist group could place a launcher in a crowded urban area, where neutralizing it would cause civilian casualties.

Ref: CONTROVERSY BREWS OVER ROLE OF ‘KILLER ROBOTS’ IN THEATER OF WAR – SingularityHub

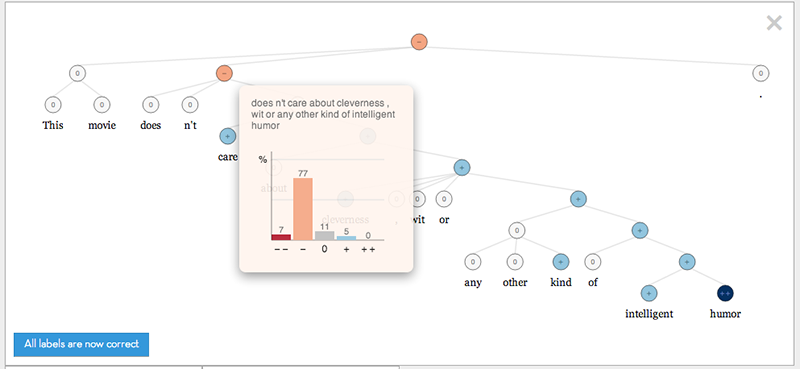

Deep Learning

“We see deep learning as a way to push sentiment understanding closer to human-level ability — whereas previous models have leveled off in terms of performance,” says Richard Socher, the Stanford University graduate student who developed NaSent together with artificial-intelligence researchers Chris Manning and Andrew Ng, one of the engineers behind Google’s deep learning project.

The aim, Socher says, is to develop algorithms that can operate without continued help from humans. “In the past, sentiment analysis has largely focused on models that ignore word order or rely on human experts,” he says. “While this works for really simple examples, it will never reach human-level understanding because word meaning changes in context and even experts cannot accurately define all the subtleties of how sentiment works. Our deep learning model solves both problems.”
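Socher's point about models that "ignore word order" can be seen with a toy comparison. The sketch below is a hypothetical illustration, not NaSent: the tiny lexicon and the one-word negation rule are assumptions chosen only to show the limitation. A bag-of-words scorer gives identical scores to two sentences built from the same words, even when their meanings are opposite.

```python
from collections import Counter

# Hypothetical toy lexicon; real systems learn word meanings from data.
LEXICON = {"good": 1, "great": 2, "bad": -1, "terrible": -2}
NEGATORS = {"not", "never"}


def bag_of_words_score(text):
    """Sum word polarities while ignoring word order entirely."""
    counts = Counter(text.lower().split())
    return sum(LEXICON.get(word, 0) * n for word, n in counts.items())


def negation_aware_score(text):
    """Flip the polarity of the next sentiment word after a negator.

    A crude, order-sensitive rule; it still misses most of the contextual
    effects Socher describes.
    """
    score = 0
    negate = False
    for word in text.lower().split():
        if word in NEGATORS:
            negate = True
            continue
        value = LEXICON.get(word, 0)
        if value:
            score += -value if negate else value
            negate = False  # negation is consumed by the first sentiment word
    return score


for sentence in ["the plot is good and not bad", "the plot is bad and not good"]:
    print(sentence, bag_of_words_score(sentence), negation_aware_score(sentence))
```

Both sentences score 0 under the bag-of-words model, while the order-sensitive rule scores them +2 and -2. Hand-written rules like this one break down quickly, which is the gap that learned compositional models aim to close.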


Ref: These Guys Are Teaching Computers How to Think Like People – Wired

Intelligent Robots can Behave more Ethically in the Battlefield than Humans

“My research hypothesis is that intelligent robots can behave more ethically in the battlefield than humans currently can,” said Ronald C. Arkin, a computer scientist at Georgia Tech, who is designing software for battlefield robots under contract with the Army. “That’s the case I make.”

[…]

In a report to the Army last year, Dr. Arkin described some of the potential benefits of autonomous fighting robots. For one thing, they can be designed without an instinct for self-preservation and, as a result, no tendency to lash out in fear. They can be built without anger or recklessness, Dr. Arkin wrote, and they can be made invulnerable to what he called “the psychological problem of ‘scenario fulfillment,’ ” which causes people to absorb new information more easily if it agrees with their pre-existing ideas.

His report drew on a 2006 survey by the surgeon general of the Army, which found that fewer than half of soldiers and marines serving in Iraq said that noncombatants should be treated with dignity and respect, and 17 percent said all civilians should be treated as insurgents. More than one-third said torture was acceptable under some conditions, and fewer than half said they would report a colleague for unethical battlefield behavior.

[…]

“It is not my belief that an unmanned system will be able to be perfectly ethical in the battlefield,” Dr. Arkin wrote in his report, “but I am convinced that they can perform more ethically than human soldiers are capable of.”

[…]

Daniel C. Dennett, a philosopher and cognitive scientist at Tufts University, agrees. “If we talk about training a robot to make distinctions that track moral relevance, that’s not beyond the pale at all,” he said. But, he added, letting machines make ethical judgments is “a moral issue that people should think about.”

Ref: A Soldier, Taking Orders From Its Ethical Judgment Center – NYTimes

Ref: MissionLab

Scientists Call for a Ban

The International Committee for Robot Arms Control (ICRAC), a founder of the Campaign to Stop Killer Robots, has issued a statement endorsed by more than 270 engineers, computing and artificial intelligence experts, roboticists, and professionals from related disciplines that calls for a ban on fully autonomous weapons. In the statement, 272 experts from 37 countries say that, “given the limitations and unknown future risks of autonomous robot weapons technology, we call for a prohibition on their development and deployment. Decisions about the application of violent force must not be delegated to machines.”

The signatories question the notion that robot weapons could meet legal requirements for the use of force: “given the absence of clear scientific evidence that robot weapons have, or are likely to have in the foreseeable future, the functionality required for accurate target identification, situational awareness or decisions regarding the proportional use of force.” The experts ask how devices controlled by complex algorithms will interact, warning: “Such interactions could create unstable and unpredictable behavior, behavior that could initiate or escalate conflicts, or cause unjustifiable harm to civilian populations.”

Ref: Scientists call for a ban – Campaign to stop killer robots

Soldiers are Developing Relationships with Their Battlefield Robots

Robots are playing an ever-increasing role on the battlefield. As a consequence, soldiers are becoming attached to their robots, assigning them names and genders, and even holding funerals when they’re destroyed. But could these emotional bonds affect outcomes in the war zone?

Through her interviews, University of Washington researcher Julie Carpenter learned that soldiers often anthropomorphize their robots and feel empathy towards them. Many soldiers see their robots as extensions of themselves and are often frustrated by technical limitations or mechanical issues, which they project onto themselves. Some operators can even tell who’s controlling a specific robot by watching the way it moves.

“They were very clear it was a tool, but at the same time, patterns in their responses indicated they sometimes interacted with the robots in ways similar to a human or pet,” Carpenter said.

Many of the soldiers she talked to named their robots, usually after a celebrity or current wife or girlfriend (never an ex). Some even painted the robot’s name on the side. Even so, the soldiers told Carpenter the chance of the robot being destroyed did not affect their decision-making over whether to send their robot into harm’s way.

Soldiers told Carpenter their first reaction to a robot being blown up was anger at losing an expensive piece of equipment, but some also described a feeling of loss.

“They would say they were angry when a robot became disabled because it is an important tool, but then they would add ‘poor little guy,’ or they’d say they had a funeral for it,” Carpenter said. “These robots are critical tools they maintain, rely on, and use daily. They are also tools that happen to move around and act as a stand-in for a team member, keeping Explosive Ordnance Disposal personnel at a safer distance from harm.”

Ref: Soldiers are developing relationships with their battlefield robots – io9

Ref: Emotional attachment to robots could affect outcome on battlefield – University of Washington

ORCA – Organizational, Relationship, and Contact Analyzer

Analysts believe that insurgents in Afghanistan form networks similar to those of street gangs in the US. So software for analysing these networks abroad ought to work just as well at home, say military researchers.

To that end, these guys have created a piece of software called the Organizational, Relationship, and Contact Analyzer or ORCA, which analyses the data from police arrests to create a social network of links between gang members.

The new software has a number of interesting features. First, it visualises the networks that gang members create, giving police analysts better insight into these organisations.

It also enables them to identify influential members of each gang and to discover subgroups, such as “corner crews” that deal in drugs at the corners of certain streets within their area.
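One simple way to flag "influential" members is to count how many fellow members each person is linked to. The sketch below assumes a hypothetical adjacency mapping (`graph`, person to set of linked people) and a mapping of known memberships (`known_members`, person to gang label). It uses plain in-gang degree as a stand-in measure of influence; this is an assumption for illustration, not ORCA's published method.

```python
def top_members_by_degree(graph, known_members, gang, k=3):
    """Rank a gang's known members by their number of links to fellow members.

    `graph` maps each person to the set of people they are linked to;
    `known_members` maps person -> gang label. Both formats are hypothetical.
    Ties are broken alphabetically so the ranking is deterministic.
    """
    members = [person for person, label in known_members.items() if label == gang]

    def in_gang_degree(person):
        return sum(1 for n in graph.get(person, ()) if known_members.get(n) == gang)

    return sorted(members, key=lambda p: (-in_gang_degree(p), p))[:k]


graph = {
    "A": {"B", "C", "D", "E"},
    "B": {"A", "C"},
    "C": {"A", "B"},
    "D": {"A"},
    "E": {"A"},
}
known = {"A": "north", "B": "north", "C": "north", "D": "south", "E": "north"}
print(top_members_by_degree(graph, known, "north", k=2))
```

Here "A" is linked to three fellow "north" members and ranks first. Degree is the crudest centrality measure; betweenness or eigenvector centrality would weigh a person's position in the network rather than just their number of links.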

The software can also assess the probability that an individual may be a member of a particular gang, even if he or she has not admitted membership. That’s possible by analysing that person’s relationship to other individuals who are known gang members.
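Estimating likely membership from a person's relationships can be sketched with a neighbour vote. This assumes the same hypothetical `graph` (person to set of linked people) and `known_members` (person to gang label) mappings. The score for each gang is simply the fraction of the person's known-member contacts who belong to it. This is a deliberately simple heuristic chosen for illustration; the research paper describes its own method, which this sketch does not reproduce.

```python
from collections import Counter


def membership_scores(person, graph, known_members):
    """Score each gang by the share of `person`'s known-member neighbours in it.

    Neighbours with no known affiliation are ignored. Returns an empty dict
    when the person has no known-member neighbours.
    """
    votes = Counter(
        known_members[n] for n in graph.get(person, ()) if n in known_members
    )
    total = sum(votes.values())
    if total == 0:
        return {}
    return {gang: count / total for gang, count in votes.items()}


graph = {"X": {"A", "B", "C", "D"}}
known = {"A": "north", "B": "north", "C": "south"}  # D's affiliation is unknown
print(membership_scores("X", graph, known))
```

For "X", two of three known-member contacts are "north", so the heuristic assigns about 0.67 to "north" and 0.33 to "south". A score like this reflects association, not proof of membership, which is why such estimates need careful handling before police act on them.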

The software can also find individuals known as connectors, who link one gang with another and who may play an important role in, for example, selling drugs from one group to another.
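A basic way to find such connectors is to look for people whose known-member contacts belong to two or more different gangs. The sketch below assumes the hypothetical `graph` and `known_members` mappings used above and is an illustrative rule, not ORCA's actual algorithm.

```python
def find_connectors(graph, known_members):
    """Return {person: set_of_gangs} for people linked to members of 2+ gangs.

    `graph` maps person -> set of linked people; `known_members` maps
    person -> gang label. Both input formats are hypothetical.
    """
    connectors = {}
    for person, neighbours in graph.items():
        gangs = {known_members[n] for n in neighbours if n in known_members}
        if len(gangs) >= 2:
            connectors[person] = gangs
    return connectors


graph = {"X": {"A", "C"}, "A": {"X", "B"}, "B": {"A"}, "C": {"X"}}
known = {"A": "north", "B": "north", "C": "south"}
print(find_connectors(graph, known))
```

Only "X" qualifies: it is linked to "A" ("north") and "C" ("south"). Note that a connector need not be a known member of any gang, which is exactly why such people can be hard to spot without network analysis.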

Paulo and co have tested the software on a police dataset of more than 5400 arrests over a three-year period. They judge individuals to be linked in the network if they are arrested at the same time.
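The linking rule described here, connecting two people if they were arrested together, can be sketched as follows. It assumes a hypothetical input format in which each arrest event is a list of person IDs; the function names are illustrative. Every pair of people in the same arrest gets an undirected edge.

```python
from collections import defaultdict
from itertools import combinations


def build_coarrest_network(arrests):
    """Link every pair of people who appear in the same arrest event.

    `arrests` is a list of arrest events, each an iterable of person IDs.
    Returns a dict mapping person -> set of co-arrested people. People who
    were only ever arrested alone gain no links and do not appear.
    """
    graph = defaultdict(set)
    for people in arrests:
        for a, b in combinations(sorted(set(people)), 2):
            graph[a].add(b)
            graph[b].add(a)
    return dict(graph)


def count_edges(graph):
    """Count undirected edges; each edge is stored once per endpoint."""
    return sum(len(neighbours) for neighbours in graph.values()) // 2


arrests = [["A", "B", "C"], ["C", "D"], ["E"], ["A", "B"]]
network = build_coarrest_network(arrests)
print(count_edges(network), sorted(network["C"]))
```

Repeat co-arrests (A and B twice here) collapse into a single edge. A real analysis might instead keep a count as an edge weight, since people arrested together repeatedly are probably more closely tied.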

This dataset revealed more than 11,000 relationships. From this, ORCA created a social network consisting of 1468 individuals who are members of 18 gangs. It was also able to identify so-called “seed sets”, small groups within a gang who are highly connected and therefore highly influential.

Ref: How Military Counterinsurgency Software Is Being Adapted To Tackle Gang Violence in Mainland USA – MIT Technology Review

Ref: Social Network Intelligence Analysis to Combat Street Gang Violence – Research Paper