Category Archives: M – media refs

Actual Facebook Graph Searches

When Facebook Resurrected the Dead

Plug&Pray

Exclusive Interview With Ray Kurzweil On Future AI Project At Google

James Bridle “We Fell in Love in Coded Space”

Kevin Slavin “Those algorithms that govern our lives”

Peter Thiel Interview on the Future of Technology

Question from the audience: How could you ever design a system that responds unpredictably? A cat or gorilla responds to stimulus unpredictably. But computers respond predictably.

Peter Thiel: There are a lot of ways in which computers already respond unpredictably. Microsoft Windows crashes unpredictably. Chess computers make unpredictable moves. These systems are deterministic, of course, in that they’ve been programmed. But often it’s not at all clear to their users what they’ll actually do. What move will Deep Blue make next? Practically speaking, we don’t know. What we do know is that the computer will play chess.

It’s harder if you have a computer that is smarter than humans. This becomes almost a theological question. If God always answers your prayers when you pray, maybe it’s not really God; maybe it’s a superintelligent computer that is working in a completely determinate way.

Question from the audience: How important is empathy in law? Human Rights Watch just released a report about fully autonomous robot military drones that actually make all the targeting decisions that humans are currently making. This seems like a pretty ominous development.

Peter Thiel: Briefly recapping my thesis here should help us approach this question. My general bias is pro-computer, pro-AI, and pro-transparency, with reservations here and there. In the main, our legal system deviates from a rational system not in a superrational way (i.e. empathy leading to otherwise unobtainable truth) but rather in a subrational way, where people are angry and act unjustly.

If you could have a system with zero empathy but also zero hate, that would probably be a large improvement over the status quo.

Regarding your example of automated killing in war contexts: that’s certainly very jarring. One can see a lot of problems with it. But the fundamental problem is not that the machines are killing people without feeling bad about it. The problem is simply that they’re killing people.

Question from the audience: But Human Rights Watch says that the more automated machines will kill more people, because human soldiers and operators sometimes hold back because of emotion and empathy.

Peter Thiel: This sort of opens up a counterfactual debate. Theory would seem to go the other way: more precision in war, such that you kill only actual combatants, results in fewer deaths because there is less collateral damage. Think of the carnage on the front in World War I. Suppose you have 1,000 people getting killed each day, and this continues for 3-4 years straight. Shouldn’t somebody have figured out that this was a bad idea? Why didn’t the people running things put an end to this? These questions suggest that our normal intuitions about war are completely wrong. If you heard that a child was being killed in an adjacent room, your instinct would be to run over and try to stop it. But in war, when many thousands are being killed… well, one sort of wonders how this is even possible. Clearly the normal intuitions don’t work.

One theory is that the politicians and generals who are running things are actually sociopaths who don’t care about the human costs. As we understand more neurobiology, it may come to light that we have a political system in which the people who want and manage to get power are, in fact, sociopaths. You can also get here with a simple syllogism: There’s not much empathy in war. That’s strange because most people have empathy. So it’s very possible that the people making war do not.

So, while it’s obvious that drones killing people in war is very disturbing, it may just be the war that is disturbing, and our intuitions are throwing us off.

Ref: Peter Thiel on The Future of Legal Technology, Notes Essay – Blake Masters

Decoding the Drama Around Data

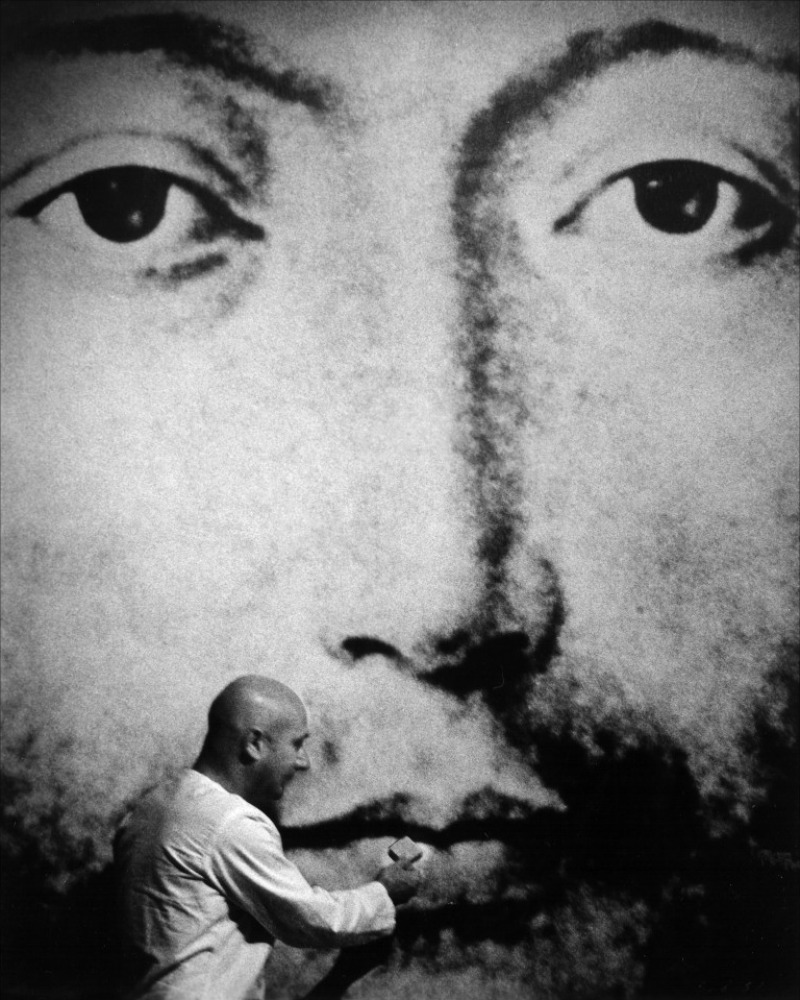

Unabomber Manifesto & THX-1138

Theodore Kaczynski, also known as the Unabomber, wrote in his manifesto about what he calls the power process and surrogate activities, and about how technology is a more powerful social force than freedom. In his account of the power process, people need an attainable goal that they must work toward in order to be fulfilled. Without the need to work to survive, a surrogate activity is needed, one that is determined by others for the good of the society. The Unabomber concludes that technology not only takes away man’s ability to control his own power process but also prevents society from shaping itself. This is exactly what happened in THX-1138, where each member of society gave up their individual freedoms to the technological society little by little until there were no individuals left.