Could software agents/bots have bias?

This question is addressed by Nick Diakopoulos in his article ‘Understanding bias in computational news media’. Although the article focuses on news-related algorithms (such as Google News), the question is worth asking of any kind of algorithm. Could algorithms have their own politics?

Even robots have biases.

Any decision process, whether human or algorithm, about what to include, exclude, or emphasize — processes of which Google News has many — has the potential to introduce bias. What’s interesting in terms of algorithms though is that the decision criteria available to the algorithm may appear innocuous while at the same time resulting in output that is perceived as biased.

Algorithms may lack the semantics for understanding higher-order concepts like stereotypes or racism — but if, for instance, the simple and measurable criteria they use to exclude information from visibility somehow do correlate with race divides, they might appear to have a racial bias. […] In a story about the Israeli-Palestinian conflict, say, is it possible their algorithm might disproportionately select sentences that serve to emphasize one side over the other?
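To make the idea concrete, here is a minimal sketch of how an innocuous-looking criterion can skew output. Everything in it is hypothetical: the toy sentences, the generic sides "A" and "B", and the `select_short` rule are illustrative assumptions, not anything taken from Google News or from the article. The rule only looks at sentence length, yet because length happens to correlate with side in this toy data, the selection ends up favouring one side.

```python
from collections import Counter

# Hypothetical toy data: (side, sentence). The side label is used only to
# audit the result afterwards; the selection rule never looks at it.
SENTENCES = [
    ("A", "Officials said the strike hit a military target."),
    ("A", "The army reported no casualties."),
    ("A", "A spokesman confirmed the operation."),
    ("B", "Residents described hours of shelling that destroyed several "
          "homes and left families without shelter."),
    ("B", "Doctors at the local hospital said they were running out of "
          "basic medical supplies."),
    ("B", "Witnesses disputed the official account."),
]


def select_short(sentences, max_words=10):
    """Keep sentences with at most max_words words (a 'neutral' criterion)."""
    return [(side, text) for side, text in sentences
            if len(text.split()) <= max_words]


def side_share(sentences):
    """Return the fraction of sentences belonging to each side."""
    counts = Counter(side for side, _ in sentences)
    total = sum(counts.values())
    return {side: counts[side] / total for side in sorted(counts)}


if __name__ == "__main__":
    print("input share:   ", side_share(SENTENCES))
    print("selected share:", side_share(select_short(SENTENCES)))
```

The input is split evenly between the two sides, but the length filter keeps three "A" sentences and only one "B" sentence. Nothing in the rule mentions sides, which is exactly why this kind of bias is easy to miss.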

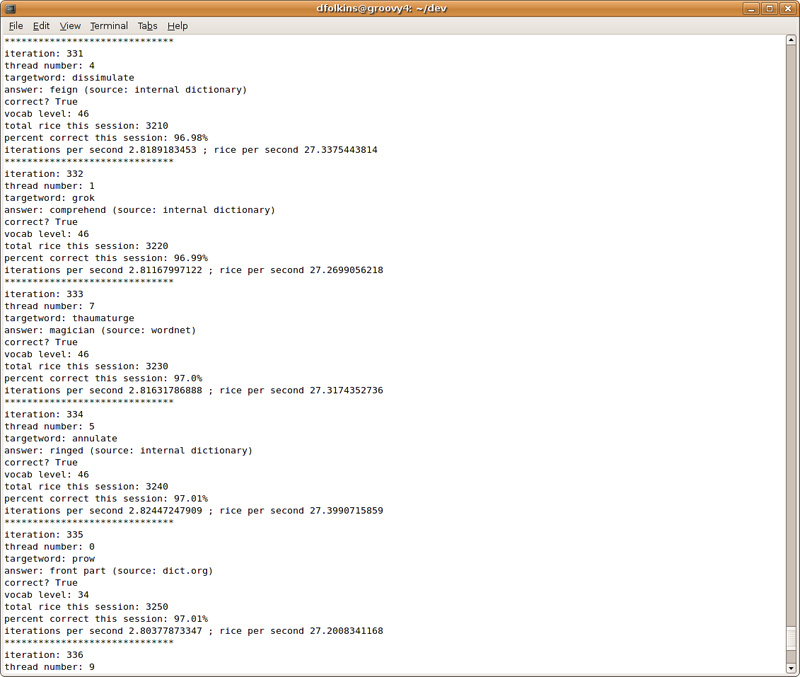

For example, could we say that the RiceMaker algorithm, which automates the vocabulary game on FreeRice to generate rice donations, has a ‘left-wing’ political orientation?

Ref: Understanding bias in computational news media – Nieman Journalism Lab

Ref: RiceMaker – via #algopop